Thursday, September 23, 2010

Leadership Quotes and Ideas

7 Rules to Create a Great Business

2. Narrow your focus and devote your resources to being best in world at only one thing.

3. Train and develop your team relentlessly because the growth of your sales is a reflection of the growth of your people.

4. Take care of people and the money will take care of itself.

5. Put your most valuable resources on your most valuable opportunities.

6. Get your product right so that people are awed by it

7. Commit to operational excellence.

Tuesday, August 31, 2010

Wednesday, June 30, 2010

Multidimensional Expressions (MDX): PART 1

Microsoft SQL Server OLAP Services provides architecture for access to multidimensional data. This data is summarized, organized, and stored in multidimensional structures for rapid response to user queries. Through OLE DB for OLAP, a PivotTable Service provides client access to this multidimensional online analytical processing (OLAP) data. For expressing queries to this data, OLE DB for OLAP employs full-fledged, highly functional expression syntax: multidimensional expressions (MDX).

We are assuming the reader is familiar with multidimensional data warehousing and OLAP terms. Before talking about MDX and how it queries data, it is worthwhile to give a brief description of the structure of a cube.

Cubes:

Cubes are key elements in online analytic processing. They are subsets of data from the OLAP store, organized and summarized into multidimensional structures. These data summaries provide the mechanism that allows rapid and uniform response times to complex queries.

Cubes are the main objects in online analytic processing (OLAP), a technology that provides fast access to data in a data warehouse. A cube is a set of data that is usually constructed from a subset of a data warehouse and is organized and summarized into a multidimensional structure defined by a set of dimensions and measures.

The fundamental cube concepts to understand are dimensions and measures.

Dimensions provide the categorized descriptions by which the measures are separated for analysis such as Customers, geographical information and Products etc.

Measures identify the numerical values that are summarized for analysis, such as price, cost, or quantity sold.

A dimension can be created for use in an individual cube or in multiple cubes. A dimension created for an individual cube is called a private dimension, whereas a dimension that can be used by multiple cubes is called a shared dimension.

Pre-calculated summary data called aggregations provides the mechanism for rapid and uniform response times to queries. Aggregations are created for a cube before end users access it. The results of a query are retrieved from the aggregations, the cube's source data in the data warehouse, a copy of this data on the Analysis server, the client cache, or a combination of these sources. An Analysis server can support many different cubes, such as a cube for sales, a cube for inventory, a cube for customers, and so on.

Every cube has a schema, which is the set of joined tables in the data warehouse from which the cube draws its source data. The central table in the schema is the fact table, the source of the cube's measures. The other tables are dimension tables, the sources of the cube's dimensions.

Each cube dimension can contain a hierarchy of levels to specify the categorical breakdown available to users. For example, a Store dimension might include the following level hierarchy: Country, State, City, and Store Name. Each level in a dimension is of a finer grain than its parent. Similarly, the hierarchy of a time dimension might include levels for year, quarter, and month. Multiple hierarchies can exist for a single dimension.

For Example: The fiscal period hierarchy levels Fiscal Year, Fiscal Quarter, and Month. The calendar hierarchy levels Calendar Year, Calendar Quarter, and Month.

One final important item of note is the concept of a member. A member is nothing more than an item in a dimension or measure. A calculated member is a dimension member whose value is calculated at run time using a specified expression. Calculated members can also be defined as measures. Only the definitions for calculated members are stored; values are calculated in memory when needed to answer a query.

Getting Starters with MDX:

Let's start by outlining one of the simplest forms of an MDX expression, bearing in mind this is for an outline of an expression returning two cube dimensions:

SELECT (query) axis specification ON COLUMNS,

(query) axis specification ON ROWS

FROM cube_name

WHERE (slicer) axis specification

A SELECT clause that determines the query axes of an MDX SELECT statement. A FROM clause that determines which cube will be queried, An Optional WHERE clause that determines which members or tuples to use on the slicer axis to restrict the data returned.

Comparing the Syntax of the MDX SELECT Statement to SQL

The syntax format for the MDX SELECT statement is similar to that of SQL syntax. However, there are several fundamental differences:

1. MDX syntax distinguishes sets by surrounding tuples or members with braces (the { and } characters.)

2. MDX queries can have 0, 1, 2 or up to 128 query axes in the SELECT statement. Each axis behaves in exactly the same way, unlike SQL where there are significant differences between how the rows and the columns of a query behave.

3. As with an SQL query, the FROM clause names the source of the data for the MDX query. However, the MDX FROM clause is restricted to a single cube. Information from other cubes can be retrieved on a value-by-value basis by using the LookupCube function.

4. The WHERE clause describes the slicer axis in an MDX query. It acts as something like an invisible, extra axis in the query, slicing the values that appear in the cells in the result set; unlike the SQL WHERE clause it does not directly affect what appears on the rows axis of the query. The functionality of the SQL WHERE clause is available through other MDX functions such as the FILTER function.

SELECT Statement Example

The following example shows a basic MDX query that uses the SELECT statement. This query returns a result set that contains the 2002 and 2003 sales and tax amounts for the Southwest sales territories.

SELECT

{ [Measures].[Sales Amount],

[Measures].[Tax Amount] } ON COLUMNS,

{ [Date].[Fiscal].[Fiscal Year].&[2002],

[Date].[Fiscal].[Fiscal Year].&[2003] } ON ROWS

FROM [Adventure Works]

WHERE ( [Sales Territory].[Southwest] )

In this example, the query defines the following result set information:

1. The SELECT clause sets the query axes as the Sales Amount and Tax Amount members of the Measures dimension, and the 2002 and 2003 members of the Date dimension.

2. The FROM clause indicates that the data source is the Adventure Works cube.

3. The WHERE clause defines the slicer axis as the Southwest member of the Sales Territory dimension.

For more learning on MDX kindly wait for next article………….. Multidimensional Expressions (MDX): PART 2

Monday, June 28, 2010

Transact-SQL Improves Database Error-Handling

The @@Error Function

Upon the completion of any T-SQL statement, SQL Server sets the @@ERROR object. If the statement was successful, @@ERROR is set to 0, otherwise it is set to the designate error code.

All SQL Server error codes can be found within the master.dbo.sysmessages system table. One important thing to remember is that @@ERROR is cleared each time a statement is executed. It is a good practice to store the value within a local variable.

Anatomy of an Error

All errors raised by SQL Server return the following information.

• Number - Each error has a unique number assigned to it.

• Message - Contains information about the error. Many errors have substitution variables that can be placed within the text. We will cover this in the SP_ADDMESSAGE and RAISERROR sections.

• Transact-SQL Improves Database Error-Handling - Indicates how serious the error is. The values are between 1 and 25.

• State - As quoted from SQL Server books on line: "Some error codes can be raised at multiple points in the source code for SQL Server. For example, an 1105' error can be raised for several different conditions. Each place the error code is raised assigns a unique state code. A Microsoft support engineer can use the state code from an error to find the location in the source code where that error code is being raised, which may provide additional ideas on how to diagnose the problem."

• Procedure name - If the destruction occurred within a stored procedure, the name is returned.

• Line - The line number of the demon code.

There are two classes of error messages in SQL Server -- fatal and nonfatal. Remember that fatal errors will kill the client connection. Creating stored procedures that cause fatal errors is a good way to get rid of slacking programmers or consultants.

SP_ADDMESSAGE

Use the sp_addmessage feature to add your own customized messages. The following information is given about each parameter:

• @msgnum (smallint) - This is the message ID. All user-defined messages start with 50001. The combination of this parameter and language must be unique.

• @severity (smallint) - The severity level is between 1 and 25. Only the system administrator can add a message above the level of 18. Messages below the age of 18 are still considered to be "toddlers," and anyone can add them.

• @msgtext nvarchar(255) - This is the text of the error message. Variables can be used within the text. This functionality is similar to the printf feature within C.

• @lang - Since SQL Server can support different languages, you can store your error messages in multiple languages. This will help greatly when we start joint-developing with people from different planets. If left NULL, it will default to the default language of the current session.

• @with_log varchar(5) - This value can be TRUE or FALSE. If you set this parameter to "TRUE," then messages are written to the Windows and SQL Server application log.

• @replace varchar(7) - This allows you to replace an existing error message with a new message text and severity level. The default is NULL. This is a great way to tick the database administrator off!

RAISERROR

You can also use the RAISERROR command to create an error message. RAISERROR can send the error message information back to a client application.

The following information is given about the parameters.

• @msg_id - This is the message ID of your user-defined message. All adhoc error messages are given the message ID of 50000.

• @msg_str - The message can have up to 400 characters. If the message contains more than 400 characters, only the first 397 will be displayed and an ellipsis will be added to indicate that the message has been cut. You can also use formatting values within the message text. An example of this is given below.

• @argument - These are values to be used within the message text.

• @WITH - Acceptable values are LOG, NOWAIT, and SETERROR. The LOG option logs the error to the server and application log; NOWAIT sends the messages immediately back to the client application; and SETERROR sets the @@ERROR value to the @MSG_ID or 50000, regardless of the serverity level.

Sample Code

The User-defined Error Message

For the formatting, I will use %s for strings and %I for integers. This functionality is similar to the printf function in C. I know you still have those C notes from college somewhere!

USE master

EXEC sp_addmessage

50010, /*message id*/

16, /*severity level*/

'Error in stored procedure %s', /*message text*/

'us_english', /*language*/

'FALSE', /*log this error*/

NULL /*replace existing error*/

Using RAISERROR to Call the Error Message

I will use the pubs database for this demonstration.

Step 1. Create the following procedure.

CREATE PROCEDURE spDemo

AS BEGIN

SELECT TOP 10 * FROM AUTHORS

IF @@ROWCOUNT <>

Step 2. Execute the procedure.

Exec spDemo

You will then get the following error message.

"Server: Msg 50010, Level 12, State 1, Procedure spDemo, Line 5 Error in stored procedure Raise Error Demo"

Here is another example of using RAISERROR without a user-defined message.

RAISERROR ('An error occurred because we are overworked and underpaid!',10,1)

Real-World Use of @@ERROR and RAISERROR

Although common practice tells us that user validation goes on the front end, most database administrators (DBA) implement developer validation of the back -end. Experience has taught them that we cannot always be trusted to implement requirements correctly. Another valid reason would be that your database is being used by several different applications, and as an added precaution the DBA has decided to implement database-level validation.

Step 1. Create the following procedure in the pubs database.

CREATE PROCEDURE spDiscounts(@TYPE VARCHAR(40),

@STORE CHAR(4),@LOW SMALLINT, @HIGH SMALLINT, @DISCOUNT NUMERIC(9,2))

AS BEGIN

IF @DISCOUNT > 7

BEGIN

RAISERROR ('You entered %d, the discount can not be greater than 7.', 10, 1, @DISCOUNT)

END

ELSE

BEGIN

BEGIN TRANSACTION

INSERT INTO DISCOUNTS(DISCOUNTTYPE, STOR_ID, LOWQTY, HIGHQTY, DISCOUNT)

VALUES (@TYPE,@STORE,@LOW,@HIGH,@DISCOUNT)

IF @@ERROR <> 0

ROLLBACK TRANSACTION

ELSE

COMMIT TRANSACTION

END

END

Step 2. Execute the following procedure.

exec spDiscounts 'My Discount', NULL, 10, 100, 12

You will receive the following error message:

"You entered 12; the discount cannot be greater than 7."

Returning logical error messages like this to the client application will save hours of head scratching.

Try…….Catch Block

A TRY…CATCH construct catches all execution errors that have a severity higher than 10 that do not close the database connection.

A TRY block must be immediately followed by an associated CATCH block. Including any other statements between the END TRY and BEGIN CATCH statements generates a syntax error.

A TRY…CATCH construct cannot span multiple batches. A TRY…CATCH construct cannot span multiple blocks of Transact-SQL statements. For example, a TRY…CATCH construct cannot span two BEGIN…END blocks of Transact-SQL statements and cannot span an IF…ELSE construct.

If there are no errors in the code that is enclosed in a TRY block, when the last statement in the TRY block has finished running, control passes to the statement immediately after the associated END CATCH statement. If there is an error in the code that is enclosed in a TRY block, control passes to the first statement in the associated CATCH block. If the END CATCH statement is the last statement in a stored procedure or trigger, control is passed back to the statement that called the stored procedure or fired the trigger.

GOTO statements cannot be used to enter a TRY or CATCH block. GOTO statements can be used to jump to a label inside the same TRY or CATCH block or to leave a TRY or CATCH block.

The TRY…CATCH construct cannot be used in a user-defined function.

Retrieving Error Information

In the scope of a CATCH block, the following system functions can be used to obtain information about the error that caused the CATCH block to be executed:

ERROR_NUMBER() returns the number of the error.

ERROR_SEVERITY() returns the severity.

ERROR_STATE() returns the error state number.

ERROR_PROCEDURE() returns the name of the stored procedure or trigger where the error occurred.

ERROR_LINE() returns the line number inside the routine that caused the error.

ERROR_MESSAGE() returns the complete text of the error message. The text includes the values supplied for any substitutable parameters, such as lengths, object names, or times.

These functions return NULL if they are called outside the scope of the CATCH block. Error information can be retrieved by using these functions from anywhere within the scope of the CATCH block.

Errors Unaffected by a TRY…CATCH Construct

TRY…CATCH constructs do not trap the following conditions:

1. Warnings or informational messages that have a severity of 10 or lower.

2. Errors that have a severity of 20 or higher that stop the SQL Server Database Engine task processing for the session. If an error occurs that has severity of 20 or higher and the database connection is not disrupted, TRY…CATCH will handle the error.

3. Attentions, such as client-interrupt requests or broken client connections.

4. When the session is ended by a system administrator by using the KILL statement.

The following types of errors are not handled by a CATCH block when they occur at the same level of execution as the TRY…CATCH construct:

1. Compile errors, such as syntax errors, that prevent a batch from running.

2. Errors that occur during statement-level recompilation, such as object name resolution errors that occur after compilation because of deferred name resolution.

Wednesday, June 23, 2010

Metadata for Data Warehousing and Business Intelligence

What is Metadata?

How can Metadata be Managed?

Extracting Metadata from Legacy Systems

What is Metadata?

Metadata is your control panel to the data warehouse. It is data that describes the data warehousing and business intelligence system:

1. Reports

2. Cubes

3. Tables (Records, Segments, Entities, etc.)

4. Columns (Fields, Attributes, Data Elements, etc.)

5. Keys

6. Indexes

Metadata is often used to control the handling of data and describes:

1. Rules

2. Transformations

3. Aggregations

4. Mappings

The power of metadata is that enables data warehousing personnel to develop and control the system without writing code in languages such as: Java, C# or Visual Basic. This saves time and money both in the initial set up and on going management.

Data Warehouse Metadata

Data warehousing has specific metadata requirements. Metadata that describes tables typically includes:

1. Physical Name

2. Logical Name

3. Type: Fact, Dimension, Bridge

4. Role: Legacy, OLTP, Stage,

5. DBMS: DB2, Informix, MS SQL Server, Oracle, Sybase

6. Location

7. Definition

8. Notes

Metadata describes columns within tables:

1. Physical Name

2. Logical Name

3. Order in Table

4. Datatype

5. Length

6. Decimal Positions

7. Nullable/Required

8. Default Value

9. Edit Rules

10.Definition

11.Notes

How can Data Warehousing Metadata be Managed?

Data warehousing and business intelligence metadata is best managed through a combination of people, process and tools.

The people side requires that people be trained in the importance and use of metadata. They need to understand how and when to use tools as well as the benefits to be gained through metadata.

The process side incorporates metadata management into the data warehousing and business intelligence life cycle. As the life cycle progresses metadata is entered into the appropriate tool and stored in a metadata repository for further use.

Metadata can be managed through individual tools:

Metadata manager / repository

Metadata extract tools

Data modeling

ETL

BI Reporting

Metadata Manager / Repository

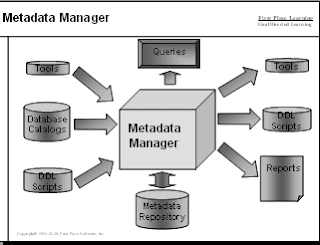

Metadata can be managed through a shared repository that combines information from multiple sources.

The metadata manager can be purchased as a software package or built as "home grown" system. Many organizations start with a spreadsheet containing data definitions and then grow to a more sophisticated approach.

Extracting Metadata from Input Sources

Metadata can be obtained through a manual process of keying in metadata or through automated processes. Scanners can extract metadata from text such as SQL DDL or COBOL programs. Other tools can directly access metadata through SQL catalogs and other metadata sources.

Picking the appropriate metadata extract tools is a key part of metadata management.

Many data modeling tools include a metadata extract capability - otherwise known as "reverse engineering". Through this tool, database information about tables and columns can be extracted. The information can then be exported from the data modeling tool to the metadata manager.

Wednesday, March 31, 2010

What's New (Reporting Services 2008?) – Part I

Report Authoring

Introduce tablix, chart, and gauge data regions. It also introduces support for richly formatted text, new data source types, and Report Builder 2.0, which offers many new features, like enhanced data layout and visualization, in an Office-like authoring environment. Finally, this topic describes incremental changes to authoring tools and the Report Definition Language (RDL) that allow a report author to take full advantage of new processing features.

1. New and Enhanced Data source type:

Included in SQL Server 2008 Reporting Services (SSRS) are new data processing extensions that enable you to report from the Teradata data source.

2. Enhanced Chart Data Region:

The new Chart data region supports a richer set of data visualization features. The new chart types include bar/column cylinder, pyramid, funnel, polar, radar, stock, candlestick, range column, range bar, smooth area, smooth line, stepped line, and box plot chart types. There is also built-in support for Pareto and Histogram charts.

A series can be displayed on any specified chart area and in any specified legend. You can insert empty points in place of missing data. You can combine multiple chart areas, multiple legends, and multiple titles on the same chart.

There is new support for secondary axes, which enable you to display multiple series using more than one axis. On each axis, you can set options to add scale breaks, logarithmic scales, custom axis intervals, and interlaced strip lines.

The new Chart data region supports automatic interval labeling to avoid label collisions, customizable rotation angles, font size and text-wrap properties for axis label calculations, support for axis crossing at custom positions, and support for background strip lines at regular or custom intervals.

You have more control to label, align and color your chart. Smart labels for data points adjust text to avoid label collisions. Custom color palettes and automatic alignment of multiple chart areas make it easier to improve the appearance and layout of charts in your report. You can collect small slices on a pie chart into one slice, drill down or show ToolTip information on individual data points, and place stacked bars or columns side-by-side in any number of stacks. The pie, doughnut, bar, and column charts support 2D and 3D drawing effects.

New design and run-time support for automatically calculated series on the chart are introduced in this release. Reporting Services provides built-in functions for 15 commonly used calculations, including statistical analysis, moving averages, and financial indicators. You can select a formula to create a new series of data points that is calculated at run time.

The user interface has been enhanced to make it easier to work with chart types. There are new dialog boxes for each chart element, shortcut menus for each chart element, support for text editing directly on the chart for titles and labels, a new chart type selector, and the ability to drag, drop, and rearrange fields in the drop-zone of a chart. This enables you to easily change the order in which series appear on a chart.

3. New Gauge Data Region:

The new Gauge data region is most often used to provide a high-level summary of your data by highlighting key performance indicator (KPI) values. The gauge uses a pointer to show a single value. You can add a range to highlight a subset of values on your scale and control the size and positioning of the scale on the gauge to create different visual effects.

The Gauge data region is a gauge panel that contains one more or gauges. You can combine multiple gauges into a gauge panel to show single values side by side. You can apply filtering or sorting on the gauge panel. You can choose between radial and linear gauge types. There is a specific thermometer gauge type to visualize temperature data. You can select a bullet graph from the list of linear gauges.

4. New Tablix Data Region:

The new Tablix data region is a generalized data region item that combines features of both a table and a matrix. In SQL Server 2008 Reporting Services (SSRS), the Tablix data region replaces the Table, Matrix, and List data regions by combining their features into one flexible grid layout. In RDL syntax, the Tablix element replaces the Table, Matrix, and List elements from previous RDL schemas.

The Tablix data region improves report layout flexibility and provides a more consistent rendering behavior. It uses a flexible grid layout to support multiple row groups and column groups. Groups are organized on a row hierarchy and a column hierarchy. Groups can be nested, adjacent, or recursive. After you add a Tablix data region, you can use the Grouping pane to quickly and easily create row and column groups, and add totals, subtotals, and grand totals. The Tablix data region automatically adjusts to include rows and columns on which to display group and summary data.

Tablix opens up new possibilities for working with complex and aggregated data, but does not prevent you from working with simple table and matrix structures. Report Designer provides three templates for the Tablix data region: Table, Matrix, and List, which you can drag from the Toolbox and use as a starting point for your reports. From here, you can design complex reports that integrate features of the various report types. In a tablix cell, you can embed any report item, including another data region.

5. New Enhancements for Text and HTML:

The text box report item has been enhanced. You can mix fonts and colors, add bold and italic styles, and use paragraph styles such as alignment and hanging indents. You can format an entire text box or you can format specific text, numbers, expressions, or fields within the text box. You can also import basic HTML from a field in your database for display in the report. You can create mail merges or template reports that mix data from a database with literal text on the design surface. The new release also provides full support for international complex scripts.

Report authors who use expressions in their reports will notice that simple expressions appear on the design surface as placeholder text. For example, in a text box on the design surface, the expression =Fields!Sales.Value is displayed as [Sales].

6. Enhancements In Report Designer:

The most noticeable change to the Report Designer work area is that the Data tab in Report Designer has been replaced with a Report Data pane that organizes all report data into one place that is always visible as you create the report layout. The Report Data pane shows you data sources, datasets, parameters, images, and built-in fields. Query designers can be accessed from the dataset.

The design surface supports a richer designer experience. Rulers show the current report item position and visible snap lines while you drag and resize items.

Other changes include a new Grouping pane that provides a convenient way to create groups for a Tablix data region, and new property dialog boxes to set properties for report items.

7. Report Builder 2.0:

The new release of Microsoft SQL Server 2008 Report Builder 2.0 offers many new features, like enhanced data layout, visualization and text formatting, and on-demand rendering. Report Builder 2.0 leverages your experience with Office 2007 products by featuring an Office-like authoring environment. You can create reports with multiple data regions, pull data from multiple data sources into a single report, and directly query relational and multidimensional data sources.

You can customize and update all existing reports, regardless of whether they were designed in Report Designer or in the previous version of the tool, Report Builder 1.0, which was installed with SQL Server 2005. Report Builder 1.0 will continue to be installed with Microsoft SQL Server 2008.

You can install the new Report Builder 2.0 separately from the Web. This version of Report Builder 2.0 is a stand-alone version that you or your administrator installs directly on your computer.

SQL Server 2008 PCU1 provides a ClickOnce version of Report Builder 2.0. The ClickOnce version can be used with Reporting Services in native mode or SharePoint integrated mode. You can start Report Builder ClickOnce from Report Manager or a SharePoint library.

8. New and Enhanced Report Items and RDL Schema:

Report Definition Language (RDL) defines the syntax for a Reporting Services report. In this release, major changes to RDL include the addition of Tablix, Chart, and Gauge data regions, which are described in the previous section. However this release also includes incremental enhancements to support the new report processing model and the new report rendering model.

RDL Page Elements

RDL page elements have been redesigned to provide more consistent control over page breaks when you export a report to different renderers. New RDL elements include PageSections, PageSection, and PageBreak. Page headers and footers, page margins, columns, column spacing, the InteractiveHeight element and the InteractiveWidth element have moved from the Report parent element to the Page parent element. New support for KeepTogether and KeepWithGroup has been introduced to support better control over pagination.

Explicit Null Values

The new attribute xsi:nil="true" enables you to distinguish between an element that is explicitly null instead of one that is not specified.

Processing-time Variables

You can declare variables that are global throughout the report or local to particular group scopes. You can use a global variable to calculate an expression one time, and then use that value in expressions throughout the report. The DeferVariableEvaluation element controls whether a variable is evaluated during on-demand processing of the report. Do not set this value if the variable has a time-dependency.

Data type for constants

The Value element has an optional DataType attribute that specifies the data type of the value if it is a constant. If DataType is omitted, a constant uses the String data type. DataType can be Boolean, DateTime, Integer, Float, or String.

Following topics I’ll cover in next article:

• Report Processing and Rendering

• Server Architecture and Tools

• Report Programmability

Thanks,

Krishan

Saturday, March 20, 2010

Overview of SQL Server Reporting Services – PART I

Article Highlights:

· Reporting: Challenges

· Introduction of Microsoft Reporting Solution

· What is Reporting Services?

· Reporting Services Architecture

· Reporting Services Key Concepts

· Report Delivery Mechanisms

· Reporting Services Integration

· Reporting Services Pros & Cons

Reporting Challenges:

· Data resides in many places

· Needed “in” applications

· Users’ Reporting Needs Change

· Web Interface

· Multiple Formats Wanted

· High Scale Reporting

How do I?

We can achieve these challenges to build a report with multiple data sources, enable interactive access to information, enable an end user to build (or modify) their own report, provide reports in a format that end users wants and integrate reporting into applications easily.

Introduction of Microsoft Reporting Solution

Microsoft introduce a server-based reporting platform that you can use to create and manage tabular, matrix, graphical, and free-form reports that contain data from relational and multidimensional data sources. The reports that you create can be viewed and managed over a World Wide Web-based connection.

· A complete set of tools that you can use to create, manage, and view reports.

· Variety of outputs including HTML, PDF, TIFF, Excel, CSV, and more.

Note: SQL Server 2008 SSRS can also prepare reports in (DOC) format.

· Set of APIs.

What is Reporting Services?

Reporting Services is an open and extensible platform supporting the authoring, management and delivery of rich, interactive reports to the entire enterprise. SSRS Enhance Reporting Capabilities:

· Scalable Server

1. Rich, Enterprise Reporting Platform

2. Scalable, Multiple data sources with multiple delivery options

3. manageable Web Services architecture

4. Scheduling, Snapshots, Caching, more…

· Advanced Authoring Tools

· End-user, Ad Hoc Reporting

· Strong Management Story

1. SOAP Web Service APIs

2. Report Manager portal

3. Extensible security model

4. Integration with SSAS, SSIS, management tools

Reporting Services Architecture

Scale-Out Architecture

Report Server Catalog

· The SSRS catalog encompasses two databases: Report Server database (the default name is ReportServer) and Report Server temporary database (the default name is ReportServerTempDB). Report Server database is a SQL Server database that stores part of SSRS configuration, report definitions, report metadata, report history, cache policy, snapshots, resources, security settings, encrypted data, scheduling and delivery data, and extension information.

· Another database that SSRS uses is the Report Server temporary database. This database is responsible for storing intermediate processing products, such as cached reports, and session and execution data.

· Report Server periodically cleans expired and orphan data in ReportServerTempDB.

Reporting Services Key Concepts

· Reporting Services Configuration Manager

· Report Manager

· Report Designer

· Report Model Designer

· Report Builder

· Report Server

· Data Source (.ds)

· Data Source View (.dsv)

· Report Model (.smdl)

· Report Definition (.rdl)

Report Designer

· Integrated into Visual Studio 2005

o Supports all languages (C#, VB, J#)

· Drag & drop metaphor, similar to Windows Forms Designer

· Use tables, lists, charts, matrices, etc. to display data

· Specify grouping, sorting, filtering, etc.

· Style the report by specifying fonts, colors, etc.

· Use expressions to change style dynamically based on data values

· Publishing and deployment features

Report Builder

· Ad hoc Reporting for the End-User

· Click Once Install

· With Report Builder you can:

1. Modify a Report

2. Build a New Report

3. Report on Relational or OLAP data

Report Designer vs. Builder

Modular Designer

· Hosted in Visual Studio 2005 (new project type)

· For generating or editing models used by Report Builder

· Auto-generation of models from data sources

Report Controls

· Controls make it easy to embed reporting functionality into applications

· Windows Forms (rich client) and Web Forms (ASP.NET) control

· Local processing mode (no server) or connected server mode

Report Delivery Mechanisms

· Pull Delivery

1. URL Access

2. Web Services

3. Share point web parts

· Push Delivery

1. Standard Subscription

2. Data Driven Subscription

· Local Delivery

1. Window form control

2. ASP.NET server control

· Ad hoc report via Report Builder

Web Services Interface

· Namespace Management

· Item Properties

· Reports Parameters

· Report Execution

· Data Source Management

· Report History

· Scheduling

· Job Management

· Model Management

· Subscription & Delivery

Reporting Services-Pros

· It is free (provided you have the SQL server license)

· Tight data integration with SQL Server, but it handles anything .NET can (Oracle, ODBC etc...).

· 2008 has native support for Terra data too

· Integration with the Visual Studio, Management studio, SharePoint and PPS.

· It is just a web application though so integration into any web page or application that can talk to a web server is easy too.

· Built in tools to do subscriptions (i.e. emails that get sent out on a regular basis to a list of people with the report on them).

· Export to a variety of formats (XML, CSV, Excel, PDF etc...)

· 2008 adds Doc too

· Ability to design templates which power users can use to build reports without knowing SQL.

Reporting Services-Cons

· Charting controls look like Excel 2003 and are limited.

· 2008 has the Dundas controls in by default so they are much more powerful, more varied and better looking.

· 2008 removes that as it is no longer an IIS web app. It runs it's own web server based off the IIS core but is closer to a stand alone app - so the security issues aren't a problem

· Designer support is a pain. 2000 Reports must be developed in VS 2003, 2005 reports must be developed in VS 2005, 2008 reports must be developed in VS 2008. By Visual Studio I mean the normal one or the thin downed version you get with the SQL Management tools.

· Compatibility. Each version of reporting services can run only the current version and one version back of the reports.

· Security is limited to Integrated Windows or Anonymous

· 2008 has added support for forms based security and for custom providers, like you get with ASP.NET

Features - Out of Article Scope

· SharePoint Web Parts

· Rich Client Printing

· Visual Studio Integration

· Management Studio Integration

· Subscription

· Caching

· Snapshot

· Security management

· New features in SSRS 2008

· Scale-out Reporting Server environment

I’ll cover these features in Overview SSRS PART –II……………………………….

Thanks,

Krishan

Friday, March 19, 2010

SSAS - MOLAP, ROLAP and HOLAP

Introduction

There are three standard storage modes (MOLAP, ROLAP and HOLAP) in OLAP applications which affect the performance of OLAP queries and cube processing, storage requirements and also determine storage locations.

SSAS (2005 and 2008) supports not only these three standard storage modes but supports proactive caching, a new feature with SSAS 2005 which enables you to combine the best of both worlds (ROLAP and MOLAP storage) for both frequency of data refresh and OLAP query performance.

Basic storage modes

The cube data can be divided into three different types - meta-data, detail data and aggregate data. No matter what storage is used, the meta-data will always be stored on the OLAP server but storage of the detail data and aggregate data will depend on the storage mode you specify.

MOLAP (Multidimensional OLAP)

This is the default and most frequently used storage mode. In this mode when you process the cube, the source data is pulled from the relational store, the required aggregation is then performed and finally the data is stored in the Analysis Services server in a compressed and optimized multidimensional format.

After processing, once the data from the underlying relational database is retrieved there exists no connection to the relational data stores. So if there is any subsequent changes in the relational data after processing that will not reflect in the cube unless the cube is reprocessed and hence it is called offline data-set mode.

Since both the detail and aggregate data are stored locally on the OLAP server, the MOLAP storage mode is very efficient and provides the fastest query performance.

Pros

1. Stores the detail and aggregate data in the OLAP server in a compressed multidimensional format; as a result the cube browsing is fastest in this mode.

2. Provides maximum query performance, because all the required data (a copy of the detail data and calculated aggregate data) are stored in the OLAP server itself and there is no need to refer to the underlying relational database.

3. All the calculations are pre-generated when the cube is processed and stored locally on the OLAP server hence even the complex calculations, as a part the query result, will be pefromed quickly.

4 MOLAP does not need to have a permanent connection to the underlying relational database (only at the time of processing) as it stores the detail and aggregate data in the OLAP server so the data can be viewed even when there is connection to the relational database.

5. MOLAP uses compression to store the data on the OLAP server and so has less storage requirements than relational databases for same amount of data. (Note however, that beginning with SQL Server 2008 you can use data compression at relational database level as well).

Cons

1. With MOLAP mode, you need frequent processing to pull refreshed data after last processing resulting in drain on system resources.

2. Latency; just after the processing if there is any changes in the relational database it will not be reflected on the OLAP server unless re-processing is performed.

3. MOLAP stores a copy of the relational data at OLAP server and so requires additional investment for storage.

4. If the data volume is high, the cube processing can take longer, though you can use incremental processing to overcome this.

ROLAP (Relational OLAP)

In comparison with MOLAP, ROLAP does not pull data from the underlying relational database source to the OLAP server but rather both cube detail data and aggregation stay at relational database source. In order to store the calculated aggregation the database server creates additional database objects (indexed views). In other words, the ROLAP mode does not copy the detail data to the OLAP server, and when a query result cannot be obtained from the query cache the created indexed views are accessed to provide the results.

Pros

1. Ability to view the data in near real-time.

2. Since ROLAP does not make another copy of data as in case of MOLAP, it has less storage requirements. This is very advantageous for large datasets which are queried infrequently such as historical data.

3. In ROLAP mode, the detail data is stored on the underlying relational database, so there is no limitation on data size that ROLAP can support or limited by the data size of relational database. In nutshell, it can even handle huge volumes of data.

Cons

1. Compared to MOLAP or HOLAP the query response is generally slower because everything is stored on relational database and not locally on the OLAP server.

2. A permanent connection to the underlying database must be maintained to view the cube data.

Note:

If you use ROLAP storage mode and your relational database is SQL Server, the Analysis Services server may create indexed views for aggregation. However this requires a few prerequisite to be available - for example, the data source must be a table, not a view. The table name must use two part naming convention or it must be qualified with owner/schema name etc. For a complete list of these prerequisites you can refer to the link provided in reference section.

HOLAP (Hybrid OLAP)

This mode is a hybrid of MOLAP and ROLAP and attempts to provide the greater data capacity of ROLAP and the fast processing and high query performance of MOLAP.

In HOLAP storage mode, the cube detail data remains in the underlying relational data store and the aggregations are stored on the OLAP server. If you query only summary data in aggregation, the HOLAP storage mode will work similar to MOLAP. For the detail data queries, HOLAP will drill through the detail data in underlying relational data store and hence performance would not be as good as MOLAP. Therefore, your query would be as fast as MOLAP in if your query result can be provided from query cache or aggregation but performance would degrade if it needs the detail data from relational data store.

Pros

1. HOLAP balances the disk space requirement, as it only stores the aggregate data on the OLAP server and the detail data remains in the relational database. So no duplicate copy of the detail data is maintained.

2. Since HOLAP does not store detail data on the OLAP server, the cube and partitions would be smaller in size than MOLAP cubes and partitions.

Performance is better than ROLAP as in HOLAP the summary data are stored on the OLAP server and queries can be satisfied from this summary data.

HOLAP would be optimal in the scenario where query response is required and query results are based on aggregations on large volumes of data.

Cons

1. Query performance (response time) degrades if it has to drill through the detail data from relational data store, in this case HOLAP performs very much like ROLAP.

Summary

Configuring the storage mode

Select a particular OLAP object in BIDS (Business Intelligence Development Studio), right click on it and then select properties. The property called StorageMode will allow you to set the storage mode to MOLAP, ROLAP or HOLAP.

Friday, March 12, 2010

SSIS - Lookup Cache Modes - Full, Partial, None

For this post, use the following schema and data:

create table fact_sales

(id int identity(1,1),

sales_rep_id int,

sales_dollars decimal(18,2)

)

create table dim_sales_rep

( id int identity(1,1),

first_name varchar(30),

last_name varchar(50)

)

insert into fact_sales (sales_rep_id, sales_dollars) values (1,120.99);

insert into fact_sales (sales_rep_id, sales_dollars) values (2,24.87);

insert into fact_sales (sales_rep_id, sales_dollars) values (3,98.11);

insert into fact_sales (sales_rep_id, sales_dollars) values (4,70.64);

insert into fact_sales (sales_rep_id, sales_dollars) values (4,114.19);

insert into fact_sales (sales_rep_id, sales_dollars) values (4,37.00);

insert into fact_sales (sales_rep_id, sales_dollars) values (5,401.50);

insert into dim_sales_rep (first_name, last_name) values ('K','S');

insert into dim_sales_rep (first_name, last_name) values ('S','D');

insert into dim_sales_rep (first_name, last_name) values ('Larry','White');

insert into dim_sales_rep (first_name, last_name) values ('Carrie','Green');

insert into dim_sales_rep (first_name, last_name) values ('Adam','Smith');

FULL Cache Mode

First, it is always advisable to build a query for the lookup, instead of choosing a table in the Table/View drop-down. The primary reason is so that you can limit the resultset to only the columns needed to perform the lookup as well as return any columns needed downstream, and to have the ability to add a WHERE clause if needed.

The full cache mode will run the specified query (or its own depending on how you assigned the lookup table) and attempt to cache all of the results. It will execute this query very early on in the package execution to ensure that the first set of rows coming out of the source(s) are cached. If SSIS runs out of memory on the machine though, the data flow will fail as the lookup component will not spool its memory overflow to disk. Be cautious of this fact. Once the data is cached, the lookup component will not go back to the database to retrieve its records, so long as the data flow is not restarted. (In SQL Server 2008, you can now reuse lookup caches.)

Using SQL Profiler, you can see that only one database call is made:

declare @p1 int

set @p1=1

exec sp_prepare @p1 output,NULL,N'select sales_rep_id, sales_dollars

from fact_sales',1

select @p1

go

exec sp_execute 1

go

SET NO_BROWSETABLE ON

go

declare @p1 int

set @p1=1

exec sp_prepare @p1 output,NULL,N'select id, first_name, last_name

from dim_sales_rep',1

select @p1

go

exec sp_execute 1

go

exec sp_unprepare 1

go

exec sp_unprepare 1

go

PARTIAL Cache Mode

Partial cache mode will not execute a query immediately at package execution. Instead, it will wait until its first input row arrives. Once the row arrives, whatever lookup value (in this case, sales_rep_id) is being passed in, will get substituted for a parameter, and then SSIS will send the query to the database for retrieval. At this point, all of the data returned will be cached for future lookups. If a new sales_rep_id is encountered, then the query will have to be re-executed, and the new resultset will get added to the lookup cache.

In other words, in the above data, if my source is “select sales_rep_id, sales_dollars from fact_sales”, we should have five database calls made by the lookup component. Even though for sales_rep_id = 4 we have three entries, in partial cache mode the first time we retrieve the lookup records for sales_rep_id = 4, the results will be cached, allowing future occurrences of sales_rep_id = 4 to be retrieved from cache.

This is illustrated in the SQL Profiler data:

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',1

go

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',2

go

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',3

go

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',4

go

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',5

go

exec sp_unprepare 1

go

In the above data, you can see at the end each sales_rep_id being passed in. Note that we only have one line for sales_rep_id = 4. That’s because the remaining two records were bounced against the lookup cache, avoiding a trip to the database.

NO Cache Mode

Using the NO Cache Mode will essentially tell SSIS that you want each incoming row (from fact_sales in this case) to be bounced against the database. Since we have seven fact_sales rows, we will see seven calls to the database - MOST of the time. It is important to note that even though we are telling the lookup component to avoid caching rows, it will keep the last match in memory and use it for the next comparison. If the next comparison’s key value matches the value still in memory, a database call is avoided, and the value is carried forward.

In our example data above, if we sort by sales_rep_id, we will still only have five calls to the database because after we lookup our first value of sales_rep_id = 4, it will be reused for the subsequent lookups for sales_rep_id = 4. If we sort our data by sales_dollars, we will have six database calls, because only two sales_rep_id = 4 records are together and hence the first lookup is only used once.

Here is a simple table illustrating each no cache example mentioned above:

SALES_REP_ID, SALES_DOLLARS, LOOKUP DATABASE CALL Y or N

1 120.99 Y

2 24.87 Y

3 98.11 Y

4 70.64 Y

4 114.19 N

4 37.00 N

5 401.50 Y

SALES_REP_ID, SALES_DOLLARS, LOOKUP DATABASE CALL Y or N

2 24.87 Y

4 37.00 Y

4 70.64 N

3 98.11 Y

4 114.19 Y

1 120.99 Y

5 401.50 Y

The SQL Profiler data for the second example above is here:

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',2

go

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',4

go

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',3

go

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',4

go

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',1

go

exec sp_executesql N'select * from (select id, first_name, last_name

from dim_sales_rep) [refTable]

where [refTable].[id] = @P1',N'@P1 int',5

go

Thursday, March 11, 2010

Commit to excellence

....Commit to excellence. Become massively innovative and wear your passion on your sleave. They might call you different or wierd or even crazy. But please remember, every great leader (or visionary or brave thinkier) was initially laughed at. Now they are revered.

Tuesday, March 9, 2010

Principles for Enterprise Data Warehouse Design

However, as with any technology, a DW can quickly become a quagmire if it's not designed, implemented and maintained properly. With this in mind, there are few principles that can help us us start - and keep - our DW design and implementation on the road to achieving our desired results. there total 7 business and IT principles because most IT issues really involve business and IT equally.

Business Principles

Organizational Consensus(Agreements):

Make every effort to gain acceptance for, and minimize resistance to, the DW. If we can involve the stakeholders early in the process, they're much more likely to embrace the DW, use it and, hopefully, champion it to the rest of the company.

Data Integrity:

Data warehousing - of any business intelligence (BI) project - is a single version of the truth about organizational data. Any design for DW should begin by minimizing the chances for data replication and inconsistency. It should also promote data integration and standardization. Any reasonable methodology we can choose to achieve data integrity should work, as long as we can implement the methodology effectively with the end result in mind.

Implementation Efficiency:

To help meet the needs of company as early as possible and minimize project costs, the DW design should be straightforward and efficient to implement. This is basic fundamental design issue. we can design a technically DW, but if that design is difficult to understand or implement or doesn't meet user needs, our DW project will be in difficulty and cost can be increase.

User Friendliness:

Data warehouse should be user friendliness and ease to use.The DW should a common front-end across the company - based on user roles and security levels. And it should also be intuitive enough to have a minimal learning curve for most users.

Operational Efficiency:

Operational efficiency can be achieved only with a DW design that is easy to implement and maintain. A technically solution might be beautiful, but a practical, easy-to-maintain solution can give better results in the long run. Data warehouse should be easy to support to business change requests. Errors and exceptions should also be easy to remedy, and support costs should be moderate over the life of the DW.

IT Principles

Scalability:

Scalability is often a big problem with DW design. The solution is to build in scalability from the start. Choose toolsets and platforms that support future expansions of data volumes and types as well as changing business requirements.

Compliance with IT Standards:

DW design should compliance with IT standards and can take advantage of skill sets of IT and business users.

These principles, can help in a better position to recognize and address potential problems before they turn into project killers but won't guarantee we will always achieve our desired results in designing and implementing DW.

Principles of Dimensional Modeling

Dimensional modeling is the name of a logical design technique often used for data warehouses. DM is the only viable technique for databases that are designed to support end-user queries in a data warehouse. It is different from, and contrasts with, entity-relation modeling. ER is very useful for the transaction capture and the data administration phases of constructing a data warehouse, but it should be avoided for end-user delivery. This paper explains the dimensional modeling and how dimensional modeling technique varies/ contrasts with ER models.

Dimensional Modeling(DM):

Dimensional Modeling is a favorite modeling technique in data warehousing. DM is a logical design technique that seeks to present the data in a standard, intuitive framework that allows for high-performance access. It is inherently dimensional, and it adheres to a discipline that uses the relational model with some important restrictions. Every dimensional model is composed of one table with a multipart key, called the fact table, and a set of smaller tables called dimension tables. Each dimension table has a single-part primary key that corresponds exactly to one of the components of the multipart key in the fact table. This characteristic “star-like” structure is often called a star join. A fact table, because it has a multipart primary key made up of two or more foreign keys, always expresses a many-to-many relationship. The most useful fact tables also contain one or more numerical measures, or “facts,” that occur for the combination of keys that define each record. Dimension tables, by contrast, most often contain descriptive textual information. Dimension attributes are used as the source of most of the interesting constraints in data warehouse queries, and they are virtually always the source of the row headers in the SQL answer set. Dimension Attributes are the various columns in a dimension table. In the Location dimension, the attributes can be Location Code, State, Country, Zip code. Generally the Dimension Attributes are used in report labels, and query constraints such as where Country=’UK’. The dimension attributes also contain one or more hierarchical relationships. Before designing a data warehouse, one must decide upon the subjects.

Contrast with E-R:

In DM, a model of tables and relations is constituted with the purpose of optimizing decision support query performance in relational databases, relative to a measurement or set of measurements of the outcomes of the business process being modeled. In contrast, conventional E-R models are constituted to remove redundancy in the data model, to facilitate retrieval of individual records having certain critical identifiers, and therefore, optimize On-line Transaction Processing (OLTP) performance.

In a DM, the grain of the fact table is usually a quantitative measurement of the outcome of the business process being analyzed. The dimension tables are generally composed of attributes measured on some discrete category scale that describe, qualify, locate, or constrain the fact table quantitative measurements. Ralph Kimball views that the data warehouse should always be modeled using a DM/star schema. Indeed Kimball has stated that while DM/star schemas have the advantages of greater understandability and superior performance relative to E-R models, their use involves no loss of information, because any E-R model can be represented as a set of DM/star schema models without loss of information. In E-R models, normalization through addition of attributive and sub-type entities destroys the clean dimensional structure of star schemas and creates “snowflakes,” which, in general, slow browsing performance. But in star schemas, browsing performance is protected by restricting the formal model to associative and fundamental entities, unless certain special conditions exist (The key to understanding the relationship between DM and ER is that a single ER diagram breaks down into multiple DM diagrams. The ER diagram does itself a disservice by representing on one diagram multiple processes that never coexist in a single data set at a single consistent point in time. It’s no wonder the ER diagram is overly complex. Thus the first step in converting an ER diagram to a set of DM diagrams is to separate the ER diagram into its discrete business processes and to model each one separately. The dimensional model has a number of important data warehouse advantages that the ER model lacks. The dimensional model is a predictable, standard framework. Report writers, query tools, and user interfaces can all make strong assumptions about the dimensional model to make the user interfaces more understandable and to make processing more efficient.

The wild variability of the structure of ER models means that each data warehouse needs custom, handwritten and tuned SQL. It also means that each schema, once it is tuned, is very vulnerable to changes in the user’s querying habits, because such schemas are asymmetrical. By contrast, in a dimensional model all dimensions serve as equal entry points to the fact table. Changes in users’ querying habits don’t change the structure of the SQL or the standard ways of measuring and controlling performance

Conclusion:

It can be concluded that dimensional modeling is the only viable technique for designing end-user delivery databases. ER modeling defeats end-user delivery and should not be used for this purpose. ER modeling does not really model a business; rather, it models the micro relationships among data elements

Thursday, February 25, 2010

Data Warehouse Design & ETL Concepts

Data warehouses and data marts are built on dimensional data modeling where fact tables are connected with dimension tables. This is most useful for users to access data since a database can be visualized as a cube of several dimensions.

Dimensional Data Modeling

Dimensional Data Modeling comprises of one or more dimension tables and fact tables.

Dimensional Data Modeling is used for calculating summarized data. For example, sales data could be collected on a daily basis and then be aggregated to the week level, the week data could be aggregated to the month level, and so on.

Dimension Tables

Dimension table is one that describes the business entities of an enterprise, represented as hierarchical, categorical information such as time, departments, locations, and products. Dimension tables are sometimes called lookup or reference tables.

For example, Product dimension table will store information about products(Product Category, Product Sub Category, Product and Product Features) and location dimension table will store information about location( country, state, county, city, zip.

Fact Tables

The centralized table in a star schema is called as FACT(measures) table. A fact table typically has two types of columns: those that contain facts and those that are foreign keys to dimension tables. The primary key of a fact table is usually a composite key that is made up of all of its foreign keys.

For example Sales Amount can be the measure for any business.

Measure Types

Additive - Measures that can be added across all dimensions.

Non Additive - Measures that cannot be added across all dimensions.

Semi Additive - Measures that can be added across few dimensions and not with others.

Star Schema

Star Schema is a relational database schema for representing multidimensional data. It is the simplest form of data warehouse schema that contains one or more dimensions and fact tables. It is called a star schema because the entity-relationship diagram between dimensions and fact tables resembles a star where one fact table is connected to multiple dimensions. The center of the star schema consists of a large fact table and it points towards the dimension tables. The advantage of star schema is slicing down, performance increase and easy understanding of data.

Snowflake Schema

A snowflake schema is a term that describes a star schema structure normalized through the use of outrigger tables. Dimension table hierarchies are broken into simpler tables.

ETL Concept

Extraction, transformation, and loading. ETL refers to the methods involved in accessing and manipulating source data and loading it into target database.

The first step in ETL process is mapping the data between source systems and target database(data warehouse or data mart). The second step is cleansing of source data in staging area. The third step is transforming cleansed source data and then loading into the target system.

Note that ETT (extraction, transformation, transportation) and ETM (extraction, transformation, move) are sometimes used instead of ETL.

Glossary of ETL

Source System

A database, application, file, or other storage facility from which the data in a data warehouse is derived.

Mapping

The definition of the relationship and data flow between source and target objects.

Metadata

Data that describes data and other structures, such as objects, business rules, and processes. For example, the schema design of a data warehouse is typically stored in a repository as metadata, which is used to generate scripts used to build and populate the data warehouse. A repository contains metadata.

Staging Area

A place where data is processed before entering the warehouse.

Cleansing

The process of resolving inconsistencies and fixing the anomalies in source data, typically as part of the ETL process.

Transformation

The process of manipulating data. Any manipulation beyond copying is a transformation. Examples include cleansing, aggregating, and integrating data from multiple sources.

Transportation

The process of moving copied or transformed data from a source to a data warehouse.

Target System

A database, application, file, or other storage facility to which the "transformed source data" is loaded in a data warehouse.

Monday, February 22, 2010

Business Intelligence Overview - Part I

Business intelligence is the art of gaining business advantage from data. It is the use of an organization's disparate data to provide meaningful information and analysis to employees, customers, suppliers, and partners for more effective decision making.

Business Intelligence Models are based on multi dimensional analysis and key performance indicators (KPI) of an enterprise.

Why Is It Important?

· Real, Actionable Data for Decision-Making

· Greater Returns on Investment

· Higher Level of Member Service

· Business Insight and Information-Sharing

· the best possible utilization of resources

· Competitor analysis

· Key client planning

How does BI Works?

Uses of Business Intelligence:

Business Intelligence is used to answer the organization queries like:

• Who are my best and worst customers (and therefore, where should I concentrate my future sales efforts)

• What parameters affect my sales (Is there a brilliant sales person? Has a campaign been successful?)

• What advantages does my business offer customers, as compared with the competition?

• Where are we making/losing money (in terms of geography, product line, campaigns, resources etc.)

• What is the net income, expenses, gross profit, and net profit for this quarter, semester and year?

Business Intelligence Solution Architecture:

Data Warehouse Concepts

What is Data Warehouse?

A data warehouse is collection of historical data; it is subject oriented data, integrated, time variant and non volatile data for decision making process. Following are key points of data warehouse:

· Data warehouse is a relational and multinational database.

· Designed for query and analysis rather than transaction process.

· Data warehouse usually historical data and derived from online transaction data.

· Data Stored in Demoralized form

· Data warehouse environment often consist an ETL solution, OLAP design.

· The data warehouse is nothing more than the union of all the constituent data marts.

Elements of the data warehouse:

Source System: Multiple Sources, Excel, People Soft, RDBMS, Flat File and SAP etc.

Data Staging Area: ETL- Extraction, Transformation and Loading.

Presentation Server: Physical Data Warehouse.

Dimensional Modeling: Alternative of E/R Modeling, designed based on Dimension and Fact table.

Business Process: A business process is usually a set of activities like "order processing" or "customer pipeline management," but business processes can overlap, and certainly the definition of an individual business process will evolve over time.

Data Marts: Designed for particular line of business activity like Sales, Marketing and Finance etc. A data mart is a complete “pie-wedge” of the overall data warehouse pie.

Operation Data Store: ODS is more than the real enterprise data warehouse, data is refreshed in near time and used for routine business activity.

On-Line Analytic Process: Online Analytical Processing is an approach that helps organization to take advantages of DATA. Technically, OLAP cube allows one to analyze data across multiple dimensions by providing multidimensional view of aggregated, grouped data.

Relation OLAP: ROLAP stands for Relational Online Analytical Process that provides multidimensional analysis of data, stored in a Relational database.

Multidimensional OLAP: MOLAP provides the analysis of data stored in a multi-dimensional data cube.

Hybrid OLAP: HOLAP a combination of both ROLAP and MOLAP can provide multidimensional analysis simultaneously of data stored in a multidimensional database and in a relational database

Desktop or Database OLAP: DOLAP provide multidimensional analysis locally in the client machine on the data collected from relational or multidimensional database servers.

End User Application: Application which provides decision making information in targeted format. Like Excel sheet and Graphical etc.

End User Data Access Tool: Client of the data ware house to access and review the data warehouse information.

Metadata: All of the information in the data warehouse environment that is not the actual data itself.

Sunday, February 21, 2010

Dynamic Management View in SQL Server 2005

The Dynamic management View in SQL server 2005 provides a window into what's going on inside SQL Server. They can provide information on what's currently happening inside the server as well as the objects it's storing. They are designed to be used instead of system tables and the various functions provided in SQL Server 2000.

The Dynamic Management Views are actually composed of both views and table-valued functions. Some apply to the entire server and are stored in the master database. Others are specific to each database. All are stored in the sys schema. They all start with dm_ in the name. They have been broken up into twelve categories:

1. Common Language Runtime Related Dynamic Management Views:

2. I/O Related Dynamic Management Views and Functions

3. Database Mirroring Related Dynamic Management Views

4. Query Notifications Related Dynamic Management Views

5. Database Related Dynamic Management Views

6. Replication Related Dynamic Management Views

7. Execution Related Dynamic Management Views and Functions

8. Service Broker Related Dynamic Management Views

9. Full-Text Search Related Dynamic Management Views

10. SQL Operating System Related Dynamic Management Views

11. Index Related Dynamic Management Views and Functions

12. Transaction Related Dynamic Management Views and Functions

Few are very common views:

Sessions (To view current session):

SELECT session_id, login_name, last_request_end_time, cpu_time

FROM sys.dm_exec_sessions

WHERE session_id >= 51

GO

Connections (To view current connections):

SELECT connection_id, session_id, client_net_address, auth_scheme

FROM sys.dm_exec_connections

GO

Request (To view current Request):

SELECT session_id, status, command, sql_handle, database_id

FROM sys.dm_exec_requests

WHERE session_id >= 51

GO

SQL Text (To view current SQL text):

SELECT st.text

FROM sys.dm_exec_requests r

CROSS APPLY sys.dm_exec_sql_text(sql_handle) AS st

WHERE r.session_id = 56

GO

Security:

In order to query these views a user needs specific permissions granted. To view the server-wide DMVs the user must be granted the VIEW SERVER STATE on the server. Administrator can give the permission using following statement:

GRANT VIEW SERVER STATE to username

Thursday, February 18, 2010

Data modeling

Data models document entities (the persons, places and things [product, warehouse, partner etc.] an organization encounters in the course of business); the relationships of entities (e.g. employee WORKS in warehouse, MANAGES product and SHIPS to partner); and the attributes of entities (description, order number, address, account balance etc.).

There are three common types of data models. Conceptual data models define and describe business concepts at a high level for stakeholders addressing a business challenge. Logical data models are more detailed and describe entities, attributes and relationships in business terms. Physical data models define database objects, schema and the actual columns and tables of data that will be created in the database.

Like the blueprint of a building, a data model is the design specification for a database. Data modeling can be helped by off the shelf data models that can be adapted to a specific use. But data architects warn that without proper time and attention to "design before you build," organizations face inaccurate reporting, incorrect data, costly remediation and difficulty in meeting new user requirements.