Project Based deployment:

This is another advantage of catalog; in SSIS 2008 or earlier packages were the core of deployment, but from 2012 project is the central configuration point for whole packages.

Versioning:

Validation

Previous versions of SSIS (2008R2 or earlier), were supported validation, but as a part in execution time. In 2012 with help of SSIS Catalog validation can be done as a separate step for packages or project.

Execution & Logging

There are four logging level is in SSIS 2012; None, Basic, Performance and Verbose. Each of these logging modes will support number of events that will be logged into catalog database at the time of execution.

Logging can be enabled in the package execution GUI, and no extra work is needed during package development and this is one of advantages of SSIS 2012 rather than previous versions.

Note: There are a lot of improvements in execution of projects and logging which I can share in some technical session.

DATA Taps are new features in SSIS 2012 which provides ability to log whole data stream in one of data flow's data paths and the best part of story is that you can add and remove data taps to a package at the time of execution.

Environments

When you deploy packages and projects to a server, you may want to run those projects in different environments. For example one for Test and another for Production. SSIS Catalog provided new repository for environments, where you can create multiple environments for example for test and production. In each environment you can assign different values to project parameters. Environments can be assigned to projects or packages at the time of execution.

Reports

There are 4 main type of reports gathered by SSIS Catalog with help of logging modes; Execution, Validation, Operation and Connection reports. Reports are based on Reporting Services (SSRS) and shows details of execution or validation of packages and projects.

Permission

Permission can be applied on each folder, project, environment and operation. SQL Server roles can be selected and read, execute or other permissions can be granted or revoked for them. all permission details will be stored in tables in SSISDB catalog database.

The new 2012 version of SSIS provided much more powerful permission style than earlier versions, and is much more suitable for enterprise real-world environments.

Report Layout

To Create SSIS Catalog you first need to install SQL Server 2012 and after installation open SSMS and right click on "Integration Services Catalogs" node and select "Create Catalog"

See you soon with new post.

Project Based deployment:

This is another advantage of catalog; in SSIS 2008 or earlier packages were the core of deployment, but from 2012 project is the central configuration point for whole packages.

Versioning:

Validation

Previous versions of SSIS (2008R2 or earlier), were supported validation, but as a part in execution time. In 2012 with help of SSIS Catalog validation can be done as a separate step for packages or project.

Execution & Logging

There are four logging level is in SSIS 2012; None, Basic, Performance and Verbose. Each of these logging modes will support number of events that will be logged into catalog database at the time of execution.

Logging can be enabled in the package execution GUI, and no extra work is needed during package development and this is one of advantages of SSIS 2012 rather than previous versions.

Note: There are a lot of improvements in execution of projects and logging which I can share in some technical session.

DATA Taps are new features in SSIS 2012 which provides ability to log whole data stream in one of data flow's data paths and the best part of story is that you can add and remove data taps to a package at the time of execution.

Environments

When you deploy packages and projects to a server, you may want to run those projects in different environments. For example one for Test and another for Production. SSIS Catalog provided new repository for environments, where you can create multiple environments for example for test and production. In each environment you can assign different values to project parameters. Environments can be assigned to projects or packages at the time of execution.

Reports

There are 4 main type of reports gathered by SSIS Catalog with help of logging modes; Execution, Validation, Operation and Connection reports. Reports are based on Reporting Services (SSRS) and shows details of execution or validation of packages and projects.

Permission

Permission can be applied on each folder, project, environment and operation. SQL Server roles can be selected and read, execute or other permissions can be granted or revoked for them. all permission details will be stored in tables in SSISDB catalog database.

The new 2012 version of SSIS provided much more powerful permission style than earlier versions, and is much more suitable for enterprise real-world environments.

Report Layout

To Create SSIS Catalog you first need to install SQL Server 2012 and after installation open SSMS and right click on "Integration Services Catalogs" node and select "Create Catalog"

See you soon with new post.

Tuesday, August 12, 2014

SSISDB Catalog – SQL Server 2012 best feature to improve SSIS project deployment

What is Catalog?

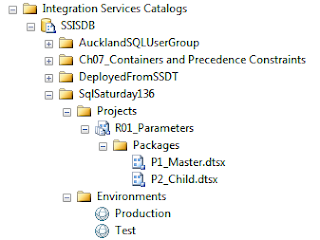

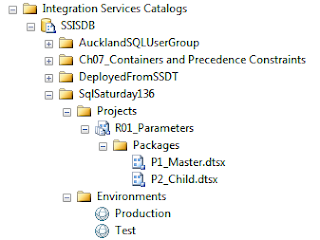

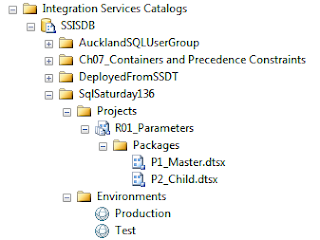

SSIS Catalog is a new repository model for Integration Services, which introduced from SQL Server 2012. This new repository model brings a lot of abilities for developers, testers and administrators. SQL Server Integration Services projects in SQL 2012 will be deployed to the SSIS catalog. So as the first step you need to create SSIS Catalog. Name of SSIS catalog database is SSISDB.

SSIS Catalog has it's own node in SSMS:

Project Based deployment:

This is another advantage of catalog; in SSIS 2008 or earlier packages were the core of deployment, but from 2012 project is the central configuration point for whole packages.

Versioning:

Validation

Previous versions of SSIS (2008R2 or earlier), were supported validation, but as a part in execution time. In 2012 with help of SSIS Catalog validation can be done as a separate step for packages or project.

Execution & Logging

There are four logging level is in SSIS 2012; None, Basic, Performance and Verbose. Each of these logging modes will support number of events that will be logged into catalog database at the time of execution.

Logging can be enabled in the package execution GUI, and no extra work is needed during package development and this is one of advantages of SSIS 2012 rather than previous versions.

Note: There are a lot of improvements in execution of projects and logging which I can share in some technical session.

DATA Taps are new features in SSIS 2012 which provides ability to log whole data stream in one of data flow's data paths and the best part of story is that you can add and remove data taps to a package at the time of execution.

Environments

When you deploy packages and projects to a server, you may want to run those projects in different environments. For example one for Test and another for Production. SSIS Catalog provided new repository for environments, where you can create multiple environments for example for test and production. In each environment you can assign different values to project parameters. Environments can be assigned to projects or packages at the time of execution.

Reports

There are 4 main type of reports gathered by SSIS Catalog with help of logging modes; Execution, Validation, Operation and Connection reports. Reports are based on Reporting Services (SSRS) and shows details of execution or validation of packages and projects.

Permission

Permission can be applied on each folder, project, environment and operation. SQL Server roles can be selected and read, execute or other permissions can be granted or revoked for them. all permission details will be stored in tables in SSISDB catalog database.

The new 2012 version of SSIS provided much more powerful permission style than earlier versions, and is much more suitable for enterprise real-world environments.

Report Layout

To Create SSIS Catalog you first need to install SQL Server 2012 and after installation open SSMS and right click on "Integration Services Catalogs" node and select "Create Catalog"

See you soon with new post.

Project Based deployment:

This is another advantage of catalog; in SSIS 2008 or earlier packages were the core of deployment, but from 2012 project is the central configuration point for whole packages.

Versioning:

Validation

Previous versions of SSIS (2008R2 or earlier), were supported validation, but as a part in execution time. In 2012 with help of SSIS Catalog validation can be done as a separate step for packages or project.

Execution & Logging

There are four logging level is in SSIS 2012; None, Basic, Performance and Verbose. Each of these logging modes will support number of events that will be logged into catalog database at the time of execution.

Logging can be enabled in the package execution GUI, and no extra work is needed during package development and this is one of advantages of SSIS 2012 rather than previous versions.

Note: There are a lot of improvements in execution of projects and logging which I can share in some technical session.

DATA Taps are new features in SSIS 2012 which provides ability to log whole data stream in one of data flow's data paths and the best part of story is that you can add and remove data taps to a package at the time of execution.

Environments

When you deploy packages and projects to a server, you may want to run those projects in different environments. For example one for Test and another for Production. SSIS Catalog provided new repository for environments, where you can create multiple environments for example for test and production. In each environment you can assign different values to project parameters. Environments can be assigned to projects or packages at the time of execution.

Reports

There are 4 main type of reports gathered by SSIS Catalog with help of logging modes; Execution, Validation, Operation and Connection reports. Reports are based on Reporting Services (SSRS) and shows details of execution or validation of packages and projects.

Permission

Permission can be applied on each folder, project, environment and operation. SQL Server roles can be selected and read, execute or other permissions can be granted or revoked for them. all permission details will be stored in tables in SSISDB catalog database.

The new 2012 version of SSIS provided much more powerful permission style than earlier versions, and is much more suitable for enterprise real-world environments.

Report Layout

To Create SSIS Catalog you first need to install SQL Server 2012 and after installation open SSMS and right click on "Integration Services Catalogs" node and select "Create Catalog"

See you soon with new post.

Project Based deployment:

This is another advantage of catalog; in SSIS 2008 or earlier packages were the core of deployment, but from 2012 project is the central configuration point for whole packages.

Versioning:

Validation

Previous versions of SSIS (2008R2 or earlier), were supported validation, but as a part in execution time. In 2012 with help of SSIS Catalog validation can be done as a separate step for packages or project.

Execution & Logging

There are four logging level is in SSIS 2012; None, Basic, Performance and Verbose. Each of these logging modes will support number of events that will be logged into catalog database at the time of execution.

Logging can be enabled in the package execution GUI, and no extra work is needed during package development and this is one of advantages of SSIS 2012 rather than previous versions.

Note: There are a lot of improvements in execution of projects and logging which I can share in some technical session.

DATA Taps are new features in SSIS 2012 which provides ability to log whole data stream in one of data flow's data paths and the best part of story is that you can add and remove data taps to a package at the time of execution.

Environments

When you deploy packages and projects to a server, you may want to run those projects in different environments. For example one for Test and another for Production. SSIS Catalog provided new repository for environments, where you can create multiple environments for example for test and production. In each environment you can assign different values to project parameters. Environments can be assigned to projects or packages at the time of execution.

Reports

There are 4 main type of reports gathered by SSIS Catalog with help of logging modes; Execution, Validation, Operation and Connection reports. Reports are based on Reporting Services (SSRS) and shows details of execution or validation of packages and projects.

Permission

Permission can be applied on each folder, project, environment and operation. SQL Server roles can be selected and read, execute or other permissions can be granted or revoked for them. all permission details will be stored in tables in SSISDB catalog database.

The new 2012 version of SSIS provided much more powerful permission style than earlier versions, and is much more suitable for enterprise real-world environments.

Report Layout

To Create SSIS Catalog you first need to install SQL Server 2012 and after installation open SSMS and right click on "Integration Services Catalogs" node and select "Create Catalog"

See you soon with new post.

Project Based deployment:

This is another advantage of catalog; in SSIS 2008 or earlier packages were the core of deployment, but from 2012 project is the central configuration point for whole packages.

Versioning:

Validation

Previous versions of SSIS (2008R2 or earlier), were supported validation, but as a part in execution time. In 2012 with help of SSIS Catalog validation can be done as a separate step for packages or project.

Execution & Logging

There are four logging level is in SSIS 2012; None, Basic, Performance and Verbose. Each of these logging modes will support number of events that will be logged into catalog database at the time of execution.

Logging can be enabled in the package execution GUI, and no extra work is needed during package development and this is one of advantages of SSIS 2012 rather than previous versions.

Note: There are a lot of improvements in execution of projects and logging which I can share in some technical session.

DATA Taps are new features in SSIS 2012 which provides ability to log whole data stream in one of data flow's data paths and the best part of story is that you can add and remove data taps to a package at the time of execution.

Environments

When you deploy packages and projects to a server, you may want to run those projects in different environments. For example one for Test and another for Production. SSIS Catalog provided new repository for environments, where you can create multiple environments for example for test and production. In each environment you can assign different values to project parameters. Environments can be assigned to projects or packages at the time of execution.

Reports

There are 4 main type of reports gathered by SSIS Catalog with help of logging modes; Execution, Validation, Operation and Connection reports. Reports are based on Reporting Services (SSRS) and shows details of execution or validation of packages and projects.

Permission

Permission can be applied on each folder, project, environment and operation. SQL Server roles can be selected and read, execute or other permissions can be granted or revoked for them. all permission details will be stored in tables in SSISDB catalog database.

The new 2012 version of SSIS provided much more powerful permission style than earlier versions, and is much more suitable for enterprise real-world environments.

Report Layout

To Create SSIS Catalog you first need to install SQL Server 2012 and after installation open SSMS and right click on "Integration Services Catalogs" node and select "Create Catalog"

See you soon with new post.

Sunday, July 7, 2013

How to handle flat file row delimiter and column delimiter issues in SSIS?

There are scenarios when the source file gets

generated with different row delimiters from different systems and results into

error when reading within the package. Handle dynamically row delimiter

issue we can use this will generic and permanent fix, so suppose if in future

we are changing row delimiter no need to change any package and re-test but

this can be managed only at time initial design time. Once package development done

with any one row delimiter (CRLF, LF, CR) we need to use different approach to

provide robust solution to avoid rework on each package. There are 3 approaches

to manage this issue:

Approach ‘A’

Use BULK

INSERT TASK instead of dataflow and flat file source. In

the BULK INSERT TASK, you can set ROW TERMINATOR and FIELD TERMINATOR

expression properties, and this will work correctly.

Approach ‘B’

We do some pretty intense

transformation of the data, so BULK INSERT was not an option. So we need

to some different approach. First we need to identifying the delimiter and then

reading the file within the respective Data Flow Tasks. Let’s see with an

example:

Example: A file gets

generated with a variable row delimiter which sometimes is “LF” and sometimes

is “CR”. The column delimiter of this file is always fixed i.e. “TAB”. So, to

read this file successfully with both the delimiters we need to follow the

below steps:

Step 1: – Create a

variable “varRowDelimiter” to store the delimiter value and a variable

“varSourceFile” which contains the path of the file.

Step 2: – Create a Script task which will read the

source file and identifies the row delimiter used in the file. The task will

use both of the above declared variables: ‘varSourceFile’ as ReadOnly

variable and ‘varRowDelimiter’ as ReadWrite variable

.

Inside the Editor we will write the code to

read the source file, identify the delimiter and store its value in the

variable. One thing which one should consider while writing this code is: how

huge your source files are going to be? As reading file will consume

memory, the files with huge sizes will affect the performance and may result

into errors such as Out of Memory. Mostly, I have seen that developers simply

use “ReadtoEnd” property even if the purpose of reading the file is not related

to reading the complete data. ReadtoEnd reads the complete file till end and

stores the entire data into memory thus resulting into high memory usage and a

bit of slow performance, thus it should be used only when there is a need to

read all the input to the end of the stream.

Here, I will be using the simple read method in

a loop in which I will read some characters at a time and as soon as I find the

delimiter, I will exit the loop.You can also read the files in fixed block

sizes using Roadblock method and can exit the loop when you have the delimiter

value.

Step 3 – Now,

create two flat file connection managers – one with the “LF” Row Delimiter and

one with the “CR” Row Delimiter and incorporate them into the respective Data

flow tasks.

Step 4: Create two Precedence constraints and connect them from

the Script Task to the Data flow tasks with the respective Expressions.

Now, just run the package for source file with

both of the delimiters and use the results as required.

The above example can be modified to check for

other delimiters as well or to cater other scenarios which requires the

delimiters identification at run time.

Approach ‘C’

This is agile approach and very useful, when we

have already delivered flat file reading solution with one delimiter and in

situation where we are can receive flat file with multiple row delimiters.

We need to create a new package will be

executed before the set of packages to load flat file in database (Destination).

This package will identify row delimiter of flat file and will replace with the

row delimiter we are using default in packages. For example if packages

developed with {CR}{LF} but files received are having {LF} then we need to

replace {LF} to {CR}{LF}.

Step 1: – Create a

variable “varReplaceRowDelimiter” to replace the delimiter value, variable “varFindRowDelimiter”

to find the existing delimiter value and a variable “varSourceFile”

which contains the path of the file.

Step 2: – Create a Script task which will read the

source file and identifies the row delimiter used in the file. The task will

use all of the above declared variables: ‘varSourceFile’, “varReplaceRowDelimiter”

, “varFindRowDelimiter” as ReadOnly

variable.

Inside the Editor we will write the code to

read the source file, identify the delimiter store its value in the variable

and replace with delimiter store its value in the variable. See the below code:

'This method is called when this script task executes in

the control flow.

'Before returning from this method, set the value of

Dts.TaskResult to indicate success or failure.

'To open Help, press F1.

Public Sub Main()

'

' Add your code here

'

Dim pk As New Package

Dim file As New System.IO.StreamReader("C:\users\ABC\Downloads\test.txt")

Dim data As String

data

= file.ReadToEnd()

data

= data.Replace( vbLf , vbCrLf)

file.Close()

Dim writer As New System.IO.StreamWriter("C:\Users\ABC\Downloads\test.txt", False)

writer.Write(data)

writer.Flush()

writer.Close()

Dts.TaskResult = ScriptResults.Success

End Sub

Use variables as below:

"C:\users\ABC\Downloads\test.txt") = Dts.Variables("@[User::varSourceFile]").Value.ToString()

vbLf = Dts.Variables("@[User::varFindRowDelimiter]").Value.ToString()

vbCrLf = Dts.Variables("@[User::varReplaceRowDelimiter]").Value.ToString()

One thing which one should consider while

writing this code is: how huge your source files are going to be? As

reading file will consume memory, the files with huge sizes will affect the

performance and may result into errors such as Out of Memory. We need to handle

buffer location through config file.

Wednesday, August 29, 2012

SQL Server – Transaction locks, Isolation Levels and SSIS Transaction

Transaction:

Lets discuss real work scenario, when you given something to me and I take it; then it is a transaction. When you withdrawal money from ATM and you received then it is a transaction but what will happen when you withdrawal money from ATM and account still showing same balance as it was before withdrawal. What will happen when you and you wife both withdrawing all your money from the joint account at the same time from different ATMs. So there must be some methodology to keep track on all such natural disaster conditions.

To achieve the above thought in the database system we have locking mechanism. It acts like this, suppose there is a room and only one person can get access at time with provided user key and password but another person wants same room during same time with different purpose. So the security interface should be manageable. We can conclude that the security system must provide different types of security code or passwords to the corresponding person. Let’s have a deeper look on this. Suppose you are doing a transaction for withdrawing money from the ATM machine and at the same time the bank manager is doing a routine check up of your transaction which is totally a different operation and suppose at the same time the bank teller is checking your account for the remaining balance. All these operations are different but accessing the same entity or resource and that is your account information that is kept inside the database. Out of these operations only you are doing write operation in the database as you are withdrawing money and the remaining balance has to be updated in the database. So a proper security mechanism must be implemented here to ensure non-conflict or smooth going of these operations. Here the security can be ensured by putting locks (and of course the type of locks) for each type of operations, which means you are isolating the resources from other transactions that may hamper its consistency. Here comes the role of Isolation levels.

Let’s have look on Locks and type of Locks. Locks can be treated as a policy which will prevent you or a process to perform any action (that may conflict with other actions) on an object or resource if that object or resource is already occupied by any other process or user. It’s like you are going to propose someone who is already with someone else. So for such type of transaction, we have type of Locks.

Types of Locks

Shared Locks(S): This lock id useful when you are doing some read operations and no manipulation like write operations (Update/Delete/Insert). This will perform dirty reads.

Exclusive Locks(X): These locks are big possessive types. They are not compatible with any other locks means these locks will not work if some other locks are already there with the resource. This locks used for data-modifications.

Update Locks(U): This can be a mixture of or perfect combination of two locks (Shared and Exclusive). Let’s take an example: Suppose you are updating any particular record out of 100, so first you will search that particular record using shared lock and then you will update that record with exclusive lock. So here Shared lock transforms to exclusive lock. This avoids deadlocks to a great extent.

Intent Locks: Also called demand locks. There are used to establish a lock hierarchy. For example we are reading some data with shared lock and same time another user wants to modify data with exclusive lock but shared lock can be compatible with other shared lock as result any number of shared lock can be obtained on piece of data and hence the user with exclusive lock has to wait till the completion of all shared lock operations. To avoid this type of situation, intent locks are very useful. Here if the second user comes with intent exclusive lock, the no other transaction can grab a shared lock. Here it can claim the use of exclusive lock after first transaction completes.

There are basically three types of Intent Locks that are most popular:

• Intent Shared Lock(IS)

• Intent exclusive (IX)

• Shared with intent exclusive (SIX)

Schema Locks: This lock protect schema of database and deals with DDL command. There are two types of schema locks: • Schema Modification (Sch-M): Applied only when modifying structure • Schema Stability (Sch-S: Applied on only when query data and prevent execution of DDL command on the table.

Bulk Update Locks: This lock is useful when preforming bulk operation on the table. It will prevent any other type of normal T-SQL operations to be executed the table.

Lost Updates: It generally occurs when more the one transaction tries to update any specific record at a time. Example when one update successfully update data and second update accidently overwrites previous information called lost update.

Isolation Level:

Isolation levels in SQL Server control the way locking works between transactions. The Isolation levels are categorized depending on the type of locks it uses for a particular level. At lower level of isolation more users can access the same resource without any confliction, but they may face concurrency related issues such as dirty-reads and data inaccuracy (described below). At higher Isolation level, these types of issues can be eliminated but here only a limited no. of users can access the resource.

SQL Server 2008 supports the following isolation levels

• Read Uncommitted

• Read Committed (The default)

• Repeatable Read

• Serializable

• Snapshot

Before I run through each of these in detail you may want to create a new database to run the examples, run the following script on the new database to create the sample data.

Note: We also want to drop the TableName table and re-run this script before each example to reset the data.

GO

CREATE

TABLE TableName

(

Id

INT IDENTITY,

Col1

INT,

Col2

INT,

Col3

INT)

INSERT

INTO TableName(Col1,Col2,Col3)

SELECT

1,2,3

UNION

ALL SELECT 1,2,3

UNION

ALL SELECT 1,2,3

UNION

ALL SELECT 1,2,3

UNION

ALL SELECT 1,2,3

UNION

ALL SELECT 1,2,3 UNION ALL SELECT 1,2,3 Before we start each isolation level in details we need to understand two things:

Dirty Read: Quite often in database processing, we come across the situation where in one transaction can change a value, and a second transaction can read this value before the original change has been committed or rolled back. This is known as a dirty read scenario because there is always the possibility that the first transaction may rollback the change, resulting in the second transaction having read an invalid value.

Phantom Reads: Occur when an insert or delete action is performed against a row that belongs to a range of rows being read by a transaction Inconsistent analysis occurs when a second transaction accesses the same row several times and reads different data each time .

Read Uncommitted

This is the lowest isolation level there is. Read uncommitted causes no shared locks to be requested which allows you to read data that is currently being modified in other transactions. It also allows other transactions to modify data that you are reading. As you can probably imagine this can cause some unexpected results in a variety of different ways. For example data returned by the select could be in a half way state if an update was running in another transaction causing some of your rows to come back with the updated values and some not to. To see read uncommitted in action let’s run Query1 in one tab of Management Studio and then quickly run Query2 in another tab before Query1 completes.

BEGIN

TRAN

UPDATE

TableName SET Col1 = 3

WAITFOR

DELAY '00:00:10'

--ROLLBACK

--COMMIT

SELECT

* FROM TableName

SELECT

* FROM TableName WITH(NOLOCK)Read Committed

This is the default isolation level and means selects will only return committed data. Select statements will issue shared lock requests against data you’re querying this causes you to wait if another transaction already has an exclusive lock on that data. Once you have your shared lock any other transactions trying to modify that data will request an exclusive lock and be made to wait until your Read Committed transaction finishes.

BEGIN

TRAN

UPDATE

TableName SET Col1 = 3

where

Id <= 3

WAITFOR

DELAY '00:00:10'

--ROLLBACK

--COMMIT

--SET TRANSACTION ISOLATION LEVEL Read Committed

SELECT

* FROM TableName

DBCC

useroptionsThis is similar to Read Committed but with the additional guarantee that if you issue the same select twice in a transaction you will get the same results both times

SET

TRANSACTION ISOLATION LEVEL REPEATABLE READ

BEGIN

TRAN

SELECT

* FROM TableName

WAITFOR

DELAY '00:00:10'

SELECT

* FROM TableName

ROLLBACK

UPDATE

TableName SET Col1 = 1Serializable

This isolation level takes Repeatable Read and adds the guarantee that no new data will be added eradicating the chance of getting Phantom Reads.

SET

TRANSACTION ISOLATION LEVEL SERIALIZABLE

BEGIN

TRAN

SELECT

* FROM TableName

WAITFOR

DELAY '00:00:10'

SELECT

* FROM TableName

ROLLBACK

INSERT

INTO TableName(Col1,Col2,Col3)

VALUES

(100,100,100)This provides the same guarantees as Serializable. So what’s the difference? Using snapshot inserting or updating doesn’t block other queries the data touched by the snapshot transaction. But you need to enable snapshot on your database:

ALTER

DATABASE databasename SET

ALLOW_SNAPSHOT_ISOLATION

ON Summary

Now we have good idea how each of the different isolation levels work. We can see how the higher level isolation uses the less concurrency and the more blocking to the table. We should always try to use the lowest isolation level. I will recommended for read committed which is default isolation level in SQL server.

Monday, March 5, 2012

SQL Server Analysis Service Events - Useful Information

Microsoft Analysis Services Events

Date Event

1996-07-01 Microsoft opens new team to build an OLAP product, codenamed

Plato (permutation of letters from OLAP)

1996-07-15 Panorama Software delegation meets with Microsoft

1996-10-27 Microsoft announces acquisition of Panorama Software development team

1998-11 OLAP Services 7.0 (codename Sphinx) ships

2000-08 Analysis Services 2000 (codename Shiloh) ships

2001-11 XML for Analysis Software Development Kit 1.0 ships

2003-04 ADOMD.NET and XML for Analysis SDK 1.1 ship

2005-10-28 Analysis Services 2005 (codename Yukon) ships

2008-08-06 Analysis Services 2008 (codename Katmai) ships

2010-12-21 Analysis Services 2008 R2

2011-07-11 Analysis Services 2008 R2 – SP1

SQL Server Release History

Version Year Release Name Codename

1.0(OS/2)1989 SQL Server 1.0

(16bit)

1.1(OS/2)1991 SQL Server 1.1

(16bit) -

4.21 1993 SQL Server 4.21 SQLNT

(WinNT)

6.0 1995 SQL Server 6.0 SQL95

6.5 1996 SQL Server 6.5 Hydra

7.0 1998 SQL Server 7.0 Sphinx

- 1999 SQL Server 7.0

OLAP Tools Plato

8.0 2000 SQL Server 2000 Shiloh

8.0 2003 SQL Server 2000

64-bit Edition Liberty

9.0 2005 SQL Server 2005 Yukon

10.0 2008 SQL Server 2008 Katmai

10.25 2010 SQL Azure Matrix (aka CloudDB)

10.5 2010 SQL Server 2008 R2 Kilimanjaro (aka KJ)

11.0 2012 SQL Server 2012 Denali

Date Event

1996-07-01 Microsoft opens new team to build an OLAP product, codenamed

Plato (permutation of letters from OLAP)

1996-07-15 Panorama Software delegation meets with Microsoft

1996-10-27 Microsoft announces acquisition of Panorama Software development team

1998-11 OLAP Services 7.0 (codename Sphinx) ships

2000-08 Analysis Services 2000 (codename Shiloh) ships

2001-11 XML for Analysis Software Development Kit 1.0 ships

2003-04 ADOMD.NET and XML for Analysis SDK 1.1 ship

2005-10-28 Analysis Services 2005 (codename Yukon) ships

2008-08-06 Analysis Services 2008 (codename Katmai) ships

2010-12-21 Analysis Services 2008 R2

2011-07-11 Analysis Services 2008 R2 – SP1

SQL Server Release History

Version Year Release Name Codename

1.0(OS/2)1989 SQL Server 1.0

(16bit)

1.1(OS/2)1991 SQL Server 1.1

(16bit) -

4.21 1993 SQL Server 4.21 SQLNT

(WinNT)

6.0 1995 SQL Server 6.0 SQL95

6.5 1996 SQL Server 6.5 Hydra

7.0 1998 SQL Server 7.0 Sphinx

- 1999 SQL Server 7.0

OLAP Tools Plato

8.0 2000 SQL Server 2000 Shiloh

8.0 2003 SQL Server 2000

64-bit Edition Liberty

9.0 2005 SQL Server 2005 Yukon

10.0 2008 SQL Server 2008 Katmai

10.25 2010 SQL Azure Matrix (aka CloudDB)

10.5 2010 SQL Server 2008 R2 Kilimanjaro (aka KJ)

11.0 2012 SQL Server 2012 Denali

Thursday, March 1, 2012

So, what is the BI semantic Model (BISM)?

BISM is a marketing concept just like ‘UDM’ unified dimension modeling introduced with SQL server 2005. Many people were confused about ‘UDM’. It was just another name for an Analysis Services Cube, but it also represented the nebulous concept of Analysis Services being a semantic layer suitable for all your reporting needs; however people often thought it was a new product that somehow replaced Analysis Services cubes and got themselves tied in all sorts of knots as a result. Thankfully, this terminology died out pretty quickly as everyone started referring to Analysis Services as, well, Analysis Services.

Present days we can see a similar amount of confusion about the term ‘BI Semantic Model’ or BISM for many of the same reason; what is the BI semantic Model exactly? It is Analysis services 2012 plus Power Pivot. Let’s be clear here; it is not just the new Analysis Services tabular model, it’s not even a specific bit of software. Analysis Services 2012 consists of two parts, the Multidimensional Model which is the Analysis Services of Cubes and dimension that we already had in 2008 R2 and earlier version, and the new Tabular model which is the Analysis Services of Table, relationships, in-memory storage and column store. BISM refer to the both models plus Power Pivot, or rather it refers to the way that Analysis Services and Power Pivot can be used as a semantic layer on top of other data for reporting purpose.

So what’s the point of a term like BISM then if it doesn’t refer to something concrete? Why not just call Analysis Services “Analysis Service and Power Pivot “Power Pivot”. BISM is just marketing concept like ‘UDM’, for three reasons:

• A single term like BISM suggests that Analysis Services 2012 and Power Pivot are a single, cohesive product, whereas the tabular and Multidimensional Model are actually very different monsters. If you are going to be working with Analysis Services 2012 on a project the first decision, you will have to make is which type of model to use, and if you change your mind later you will have to start development from scratch and learn lot of new skills. We can think that one day the two models will merge but it won’t happen soon.

• Microsoft has currently identified that many people what do BI but due to the complexity of building Multidimensional models. The simplicity of Tabular Model goes long way to solving this problem; Tabular also replaced report builder model which were really just a semantic layer people didn’t like SSAS or had valid reason to stay with relational reporting.

• Calling something a “semantic layer” suggests that it’s a nice, light, thin, easy to implement layer on top of your relational data warehouse, with no data duplication involved. In actual fact anyone has used the multidimensional model will know you almost always use MOLAP storage which involves all the data being copied in Analysis Services; and I suspect when people start using the Tabular model they will be using it in Vertipaq mode (where again all the data gets copied into Analysis Services) rather than in DirectQuery mode (where all queries are translated to SQL which is then run against SQL Server).

This is my understanding about BISM and may be different with others understanding but I don’t think using the term BISM is useful in any technical context, just as the term UDM wasn’t in the past, because it is a blanket term for several different things.

Present days we can see a similar amount of confusion about the term ‘BI Semantic Model’ or BISM for many of the same reason; what is the BI semantic Model exactly? It is Analysis services 2012 plus Power Pivot. Let’s be clear here; it is not just the new Analysis Services tabular model, it’s not even a specific bit of software. Analysis Services 2012 consists of two parts, the Multidimensional Model which is the Analysis Services of Cubes and dimension that we already had in 2008 R2 and earlier version, and the new Tabular model which is the Analysis Services of Table, relationships, in-memory storage and column store. BISM refer to the both models plus Power Pivot, or rather it refers to the way that Analysis Services and Power Pivot can be used as a semantic layer on top of other data for reporting purpose.

So what’s the point of a term like BISM then if it doesn’t refer to something concrete? Why not just call Analysis Services “Analysis Service and Power Pivot “Power Pivot”. BISM is just marketing concept like ‘UDM’, for three reasons:

• A single term like BISM suggests that Analysis Services 2012 and Power Pivot are a single, cohesive product, whereas the tabular and Multidimensional Model are actually very different monsters. If you are going to be working with Analysis Services 2012 on a project the first decision, you will have to make is which type of model to use, and if you change your mind later you will have to start development from scratch and learn lot of new skills. We can think that one day the two models will merge but it won’t happen soon.

• Microsoft has currently identified that many people what do BI but due to the complexity of building Multidimensional models. The simplicity of Tabular Model goes long way to solving this problem; Tabular also replaced report builder model which were really just a semantic layer people didn’t like SSAS or had valid reason to stay with relational reporting.

• Calling something a “semantic layer” suggests that it’s a nice, light, thin, easy to implement layer on top of your relational data warehouse, with no data duplication involved. In actual fact anyone has used the multidimensional model will know you almost always use MOLAP storage which involves all the data being copied in Analysis Services; and I suspect when people start using the Tabular model they will be using it in Vertipaq mode (where again all the data gets copied into Analysis Services) rather than in DirectQuery mode (where all queries are translated to SQL which is then run against SQL Server).

This is my understanding about BISM and may be different with others understanding but I don’t think using the term BISM is useful in any technical context, just as the term UDM wasn’t in the past, because it is a blanket term for several different things.

Monday, January 9, 2012

Mixed datatypes in Excel Cell problem in SSIS

Case

My column in Excel contains mixed data types (strings and numbers). The string values are shown as NULL. How can I get all values?

Solution

The Excel driver reads the first 8 rows to determine the datatype. If the majority of the rows are numbers it will choose a numeric datatype instead of a string data type and visa versa.

So if your Excel sheet looks like this. The column start with number then gets DT_R8 and column start with string gets DT_WSTR.

TypeGuessRows

You can change the default of checking 8 rows (1 to16) by changing the windows registry, If you want to change that number... Start the Windows Registry-editor and search for "TypeGuessRows". The place of that key depends on the version of Windows and Office. Examples:

HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\Jet\4.0\Engines\Excel

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Jet\3.0\Engines\Excel

Note: For performance reasons, setting the TypeGuessRows value to zero (0) is not recommended (especially when your Excel table is very large). With this zero value Excel will scan all records in your table to determine the type of data in each column.

Steps needs to be followed:

To change the value of TypeGuessRows, use these steps:

1. On the Start menu, click Run. In the Run dialog box, type Regedt32, and then click OK.

2. Open the following key in the Registry editor:

a. HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Jet\4.0\Engines\Excel

Note For 64-bit systems, the corresponding key is as follows:

b. HKLM\SOFTWARE\wow6432node\microsoft\jet\4.0\engines\excel

3. Double-click TypeGuessRows.

4. In the DWORD editor dialog box, click Decimal under Base(Based on requirement). Type a value between 0 and 16, inclusive, for Value data.

5. Click OK, and then exit the Registry Editor.

Import Mode

If you want to get the data of all rows, regardless the datatypes in Excel, you should add the string ;IMEX=1 to the Excel Connection String. Changing to Import Mode (0 is Export mode, 1 is Import mode and 2 is Linked mode) will result in getting everything (including numbers) as a string. Go to the properties of the Excel Connection manager and change the Connection String property:

Provider=Microsoft.Jet.OLEDB.4.0;Data Source=D:\MyExcelFile1.xls;Extended Properties="EXCEL 8.0;HDR=YES;IMEX=1";

Provider=Microsoft.ACE.OLEDB.12.0;Data Source=D:\MyExcelFile.xlsx;Extended Properties="Excel 12.0 XML;HDR=YES;IMEX=1";

My column in Excel contains mixed data types (strings and numbers). The string values are shown as NULL. How can I get all values?

Solution

The Excel driver reads the first 8 rows to determine the datatype. If the majority of the rows are numbers it will choose a numeric datatype instead of a string data type and visa versa.

So if your Excel sheet looks like this. The column start with number then gets DT_R8 and column start with string gets DT_WSTR.

TypeGuessRows

You can change the default of checking 8 rows (1 to16) by changing the windows registry, If you want to change that number... Start the Windows Registry-editor and search for "TypeGuessRows". The place of that key depends on the version of Windows and Office. Examples:

HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\Jet\4.0\Engines\Excel

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Jet\3.0\Engines\Excel

Note: For performance reasons, setting the TypeGuessRows value to zero (0) is not recommended (especially when your Excel table is very large). With this zero value Excel will scan all records in your table to determine the type of data in each column.

Steps needs to be followed:

To change the value of TypeGuessRows, use these steps:

1. On the Start menu, click Run. In the Run dialog box, type Regedt32, and then click OK.

2. Open the following key in the Registry editor:

a. HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Jet\4.0\Engines\Excel

Note For 64-bit systems, the corresponding key is as follows:

b. HKLM\SOFTWARE\wow6432node\microsoft\jet\4.0\engines\excel

3. Double-click TypeGuessRows.

4. In the DWORD editor dialog box, click Decimal under Base(Based on requirement). Type a value between 0 and 16, inclusive, for Value data.

5. Click OK, and then exit the Registry Editor.

Import Mode

If you want to get the data of all rows, regardless the datatypes in Excel, you should add the string ;IMEX=1 to the Excel Connection String. Changing to Import Mode (0 is Export mode, 1 is Import mode and 2 is Linked mode) will result in getting everything (including numbers) as a string. Go to the properties of the Excel Connection manager and change the Connection String property:

Provider=Microsoft.Jet.OLEDB.4.0;Data Source=D:\MyExcelFile1.xls;Extended Properties="EXCEL 8.0;HDR=YES;IMEX=1";

Provider=Microsoft.ACE.OLEDB.12.0;Data Source=D:\MyExcelFile.xlsx;Extended Properties="Excel 12.0 XML;HDR=YES;IMEX=1";

Friday, December 30, 2011

Types of Dimensions

The Type property setting provides information about the contents of a dimension to server and client applications. In some cases, the Type setting only provides guidance for client applications and is optional. In other cases, such as Accounts or Time dimensions, the Type property settings for the dimension and its attributes determine specific server-based behaviours and may be required to implement certain behaviours in the cube. For example, the Type property of a dimension can be set to Accounts to indicate to client applications that the standard dimension contains account attributes.

The default setting for the dimension type is Regular, which makes no assumptions about the contents of the dimension. This is the default setting for all dimensions when you initially define a dimension unless you specify Time when defining the dimension using the Dimension Wizard. You should also leave Regular as the dimension type if the Dimension Wizard does not list an appropriate type for Dimension type.

Available Dimension Types

The following table describes the dimension types available in Microsoft SQL Server Analysis Services.

Regular - A dimension whose type has not been set to a special dimension type.

Time - A dimension whose attributes represent time periods, such as years,

semesters, quarters, months, and days.

Organization - A dimension whose attributes represent organizational information,

such as employees or subsidiaries.

Geography - A dimension whose attributes represent geographic information, such

as cities or postal codes.

BillOfMaterials-A dimension whose attributes represent inventory or manufacturing

information, such as parts lists for products.

Accounts - A dimension whose attributes represent a chart of accounts for

financial reporting purposes.

Customers - A dimension whose attributes represent customer or contact

information.

Products - A dimension whose attributes represent product information.

Scenario - A dimension whose attributes represent planning or strategic

analysis information.

Quantitative - A dimension whose attributes represent quantitative information.

Utility - A dimension whose attributes represent miscellaneous information.

Currency - This type of dimension contains currency data and metadata.

Rates - A dimension whose attributes represent currency rate information.

Channel - A dimension whose attributes represent channel information.

Promotion - A dimension whose attributes represent marketing promotion

information.

According to dimension modeling there are different types of dimnesions:

Conformed dimension

A conformed dimension is a set of data attributes that have been physically implemented in multiple database tables using the same structure, attributes, domain values, definitions and concepts in each implementation. A conformed dimension cuts across many facts.

Dimensions are conformed when they are either exactly the same (including keys) or one is a perfect subset of the other. Most important, the row headers produced in the answer sets from two different conformed dimensions must be able to match perfectly.

Conformed dimensions are either identical or strict mathematical subsets of the most granular, detailed dimension. Dimension tables are not conformed if the attributes are labeled differently or contain different values. Conformed dimensions come in several different flavors. At the most basic level, conformed dimensions mean exactly the same thing with every possible fact table to which they are joined. The date dimension table connected to the sales facts is identical to the date dimension connected to the inventory facts.

Junk dimension

A junk dimension is a convenient grouping of typically low-cardinality flags and indicators. By creating an abstract dimension, these flags and indicators are removed from the fact table while placing them into a useful dimensional framework. A Junk Dimension is a dimension table consisting of attributes that do not belong in the fact table or in any of the existing dimension tables. The nature of these attributes is usually text or various flags, e.g. non-generic comments or just simple yes/no or true/false indicators. These kinds of attributes are typically remaining when all the obvious dimensions in the business process have been identified and thus the designer is faced with the challenge of where to put these attributes that do not belong in the other dimensions.

One solution is to create a new dimension for each of the remaining attributes, but due to their nature, it could be necessary to create a vast number of new dimensions resulting in a fact table with a very large number of foreign keys. The designer could also decide to leave the remaining attributes in the fact table but this could make the row length of the table unnecessarily large if, for example, the attributes is a long text string.

The solution to this challenge is to identify all the attributes and then put them into one or several Junk Dimensions. One Junk Dimension can hold several true/false or yes/no indicators that have no correlation with each other, so it would be convenient to convert the indicators into a more describing attribute. An example would be an indicator about whether a package had arrived, instead of indicating this as “yes” or “no”, it would be converted into “arrived” or “pending” in the junk dimension. The designer can choose to build the dimension table so it ends up holding all the indicators occurring with every other indicator so that all combinations are covered. This sets up a fixed size for the table itself which would be 2^x rows, where x is the number of indicators. This solution is appropriate in situations where the designer would expect to encounter a lot of different combinations and where the possible combinations are limited to an acceptable level. In a situation where the number of indicators are large, thus creating a very big table or where the designer only expect to encounter a few of the possible combinations, it would be more appropriate to build each row in the junk dimension as new combinations are encountered. To limit the size of the tables, multiple junk dimensions might be appropriate in other situations depending on the correlation between various indicators.

Junk dimensions are also appropriate for placing attributes like non-generic comments from the fact table. Such attributes might consist of data from an optional comment field when a customer places an order and as a result will probably be blank in many cases. Therefore the junk dimension should contain a single row representing the blanks as a surrogate key that will be used in the fact table for every row returned with a blank comment field.

A dimension key, such as a transaction number, invoice number, ticket number, or bill-of-lading number, that has no attributes and hence does not join to an actual dimension table. Degenerate dimensions are very common when the grain of a fact table represents a single transaction item or line item because the degenerate dimension represents the unique identifier of the parent. Degenerate dimensions often play an integral role in the fact table's primary key.

Role-playing dimensions

Dimensions are often recycled for multiple applications within the same database. For instance, a "Date" dimension can be used for "Date of Sale", as well as "Date of Delivery", or "Date of Hire". This is often referred to as a "role-playing dimension".

Degenerated Dimension (DD):

An item that is in the fact table but is stripped off of its description, because the description belongs in dimension table, is referred to as Degenerated Dimension. Since it looks like dimension, but is really in fact table and has been degenerated of its description, hence is called degenerated dimension. Now coming to the slowly changing dimensions (SCD) and Slowly Growing Dimensions (SGD): I would like to classify them to be more of an attributes of dimensions its self.

Although other might disagree to this view but Slowly Changing Dimensions are basically those dimensions whose key value will remain static but description might change over the period of time. For example, the product id in a companies, product line might remain the same, but the description might change from time to time, hence, product dimension is called slowly changing dimension.

Lets consider a customer dimension, which will have a unique customer id but the customer name (company name) might change periodically due to buy out / acquisitions, Hence, slowly changing dimension, as customer number is static but customer name is changing, However, on the other hand the company will add more customers to its existing list of customers and it is highly unlikely that the company will acquire astronomical number of customer over night (wouldn’t the company CEO love that) hence, the customer dimension is both a Slowly changing as well as slowly growing dimension.

The default setting for the dimension type is Regular, which makes no assumptions about the contents of the dimension. This is the default setting for all dimensions when you initially define a dimension unless you specify Time when defining the dimension using the Dimension Wizard. You should also leave Regular as the dimension type if the Dimension Wizard does not list an appropriate type for Dimension type.

Available Dimension Types

The following table describes the dimension types available in Microsoft SQL Server Analysis Services.

Regular - A dimension whose type has not been set to a special dimension type.

Time - A dimension whose attributes represent time periods, such as years,

semesters, quarters, months, and days.

Organization - A dimension whose attributes represent organizational information,

such as employees or subsidiaries.

Geography - A dimension whose attributes represent geographic information, such

as cities or postal codes.

BillOfMaterials-A dimension whose attributes represent inventory or manufacturing

information, such as parts lists for products.

Accounts - A dimension whose attributes represent a chart of accounts for

financial reporting purposes.

Customers - A dimension whose attributes represent customer or contact

information.

Products - A dimension whose attributes represent product information.

Scenario - A dimension whose attributes represent planning or strategic

analysis information.

Quantitative - A dimension whose attributes represent quantitative information.

Utility - A dimension whose attributes represent miscellaneous information.

Currency - This type of dimension contains currency data and metadata.

Rates - A dimension whose attributes represent currency rate information.

Channel - A dimension whose attributes represent channel information.

Promotion - A dimension whose attributes represent marketing promotion

information.

According to dimension modeling there are different types of dimnesions:

Conformed dimension

A conformed dimension is a set of data attributes that have been physically implemented in multiple database tables using the same structure, attributes, domain values, definitions and concepts in each implementation. A conformed dimension cuts across many facts.

Dimensions are conformed when they are either exactly the same (including keys) or one is a perfect subset of the other. Most important, the row headers produced in the answer sets from two different conformed dimensions must be able to match perfectly.

Conformed dimensions are either identical or strict mathematical subsets of the most granular, detailed dimension. Dimension tables are not conformed if the attributes are labeled differently or contain different values. Conformed dimensions come in several different flavors. At the most basic level, conformed dimensions mean exactly the same thing with every possible fact table to which they are joined. The date dimension table connected to the sales facts is identical to the date dimension connected to the inventory facts.

Junk dimension

A junk dimension is a convenient grouping of typically low-cardinality flags and indicators. By creating an abstract dimension, these flags and indicators are removed from the fact table while placing them into a useful dimensional framework. A Junk Dimension is a dimension table consisting of attributes that do not belong in the fact table or in any of the existing dimension tables. The nature of these attributes is usually text or various flags, e.g. non-generic comments or just simple yes/no or true/false indicators. These kinds of attributes are typically remaining when all the obvious dimensions in the business process have been identified and thus the designer is faced with the challenge of where to put these attributes that do not belong in the other dimensions.

One solution is to create a new dimension for each of the remaining attributes, but due to their nature, it could be necessary to create a vast number of new dimensions resulting in a fact table with a very large number of foreign keys. The designer could also decide to leave the remaining attributes in the fact table but this could make the row length of the table unnecessarily large if, for example, the attributes is a long text string.

The solution to this challenge is to identify all the attributes and then put them into one or several Junk Dimensions. One Junk Dimension can hold several true/false or yes/no indicators that have no correlation with each other, so it would be convenient to convert the indicators into a more describing attribute. An example would be an indicator about whether a package had arrived, instead of indicating this as “yes” or “no”, it would be converted into “arrived” or “pending” in the junk dimension. The designer can choose to build the dimension table so it ends up holding all the indicators occurring with every other indicator so that all combinations are covered. This sets up a fixed size for the table itself which would be 2^x rows, where x is the number of indicators. This solution is appropriate in situations where the designer would expect to encounter a lot of different combinations and where the possible combinations are limited to an acceptable level. In a situation where the number of indicators are large, thus creating a very big table or where the designer only expect to encounter a few of the possible combinations, it would be more appropriate to build each row in the junk dimension as new combinations are encountered. To limit the size of the tables, multiple junk dimensions might be appropriate in other situations depending on the correlation between various indicators.

Junk dimensions are also appropriate for placing attributes like non-generic comments from the fact table. Such attributes might consist of data from an optional comment field when a customer places an order and as a result will probably be blank in many cases. Therefore the junk dimension should contain a single row representing the blanks as a surrogate key that will be used in the fact table for every row returned with a blank comment field.

A dimension key, such as a transaction number, invoice number, ticket number, or bill-of-lading number, that has no attributes and hence does not join to an actual dimension table. Degenerate dimensions are very common when the grain of a fact table represents a single transaction item or line item because the degenerate dimension represents the unique identifier of the parent. Degenerate dimensions often play an integral role in the fact table's primary key.

Role-playing dimensions

Dimensions are often recycled for multiple applications within the same database. For instance, a "Date" dimension can be used for "Date of Sale", as well as "Date of Delivery", or "Date of Hire". This is often referred to as a "role-playing dimension".

Degenerated Dimension (DD):

An item that is in the fact table but is stripped off of its description, because the description belongs in dimension table, is referred to as Degenerated Dimension. Since it looks like dimension, but is really in fact table and has been degenerated of its description, hence is called degenerated dimension. Now coming to the slowly changing dimensions (SCD) and Slowly Growing Dimensions (SGD): I would like to classify them to be more of an attributes of dimensions its self.

Although other might disagree to this view but Slowly Changing Dimensions are basically those dimensions whose key value will remain static but description might change over the period of time. For example, the product id in a companies, product line might remain the same, but the description might change from time to time, hence, product dimension is called slowly changing dimension.

Lets consider a customer dimension, which will have a unique customer id but the customer name (company name) might change periodically due to buy out / acquisitions, Hence, slowly changing dimension, as customer number is static but customer name is changing, However, on the other hand the company will add more customers to its existing list of customers and it is highly unlikely that the company will acquire astronomical number of customer over night (wouldn’t the company CEO love that) hence, the customer dimension is both a Slowly changing as well as slowly growing dimension.

Subscribe to:

Comments (Atom)

.png)